mdk. Arcade ROM and information repository for MAME

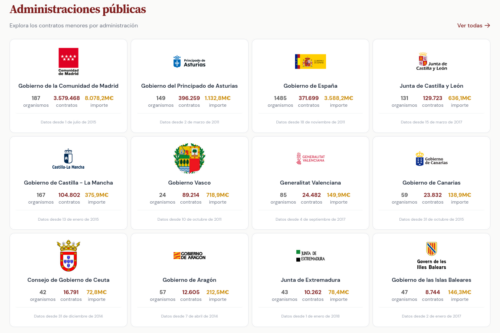

Public administrations are required to publish their minor contracts. What they are not required to do is make it easy: platforms that fail, impossible formats, absurd technical limits that complicate your life. This tool exists so that you don’t have to fight a system designed to make you give up. Your money, your right to know.

Link rot (also called link death, link breaking, or reference rot) is the phenomenon of hyperlinks tending over time to cease to point to their originally targeted file, web page, or server due to that resource being relocated to a new address or becoming permanently unavailable. A link that no longer resolves at the intended target may be called broken or dead.

The rate of link rot is a subject of study and research due to its significance to the internet’s ability to preserve information. Estimates of that rate vary dramatically between studies. Information professionals have warned that link rot could make important archival data disappear, potentially impacting the legal system and scholarship.
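
Measuring link rot starts with checking whether each link still resolves. The minimal sketch below uses only Python’s standard library to send one HEAD request per URL and sort the outcome into “alive”, “dead” or “suspect”. The three categories, the choice to treat 404 and 410 as dead, and the `link-rot-check/0.1` user agent are assumptions for illustration, not a standard taxonomy.

```python
import urllib.error
import urllib.request
from typing import Optional


def classify_status(status: Optional[int]) -> str:
    """Map an HTTP status code, or None for a network failure, to a link state."""
    if status is None:
        return "dead"      # DNS failure, refused connection, timeout
    if 200 <= status < 400:
        return "alive"     # success (urlopen follows redirects itself)
    if status in (404, 410):
        return "dead"      # Not Found / Gone: the classic broken link
    return "suspect"       # e.g. 403, 405 or 5xx: possibly temporary or bot-blocking


def check_link(url: str, timeout: float = 10.0) -> str:
    """Send one HEAD request to url and classify the outcome."""
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "link-rot-check/0.1"})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return classify_status(response.status)
    except urllib.error.HTTPError as err:   # server answered with 4xx/5xx
        return classify_status(err.code)
    except OSError:                         # URLError, timeouts, connection resets
        return classify_status(None)
```

Running `check_link` over a list of URLs at intervals gives a rough rot rate. Some servers reject HEAD with 405, so retrying “suspect” links with GET is a common refinement.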

The medialabmadrid program ran from 2002 to 2006 under the direction of Karin Ohlenschläger and Luis Rico. It was conceived as a laboratory open to dialogue among the most diverse artistic, scientific and technological practices. The program’s modular, open structure is organized around five interconnected areas of activity: Art, Science, Technology, Society and Sustainability (ACTS+S).

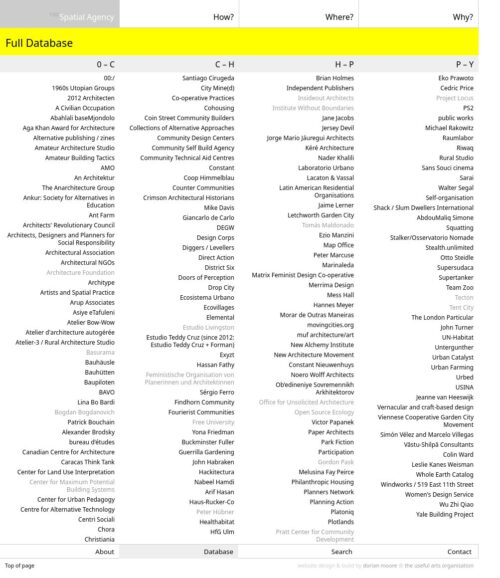

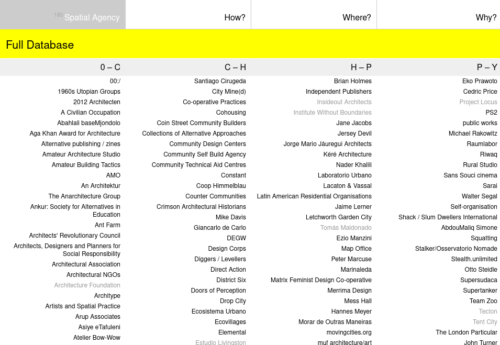

Spatial Agency is a project that presents a new way of looking at how buildings and space can be produced. Moving away from architecture’s traditional focus on the look and making of buildings, Spatial Agency proposes a much more expansive field of opportunities in which architects and non-architects can operate. It suggests other ways of doing architecture.

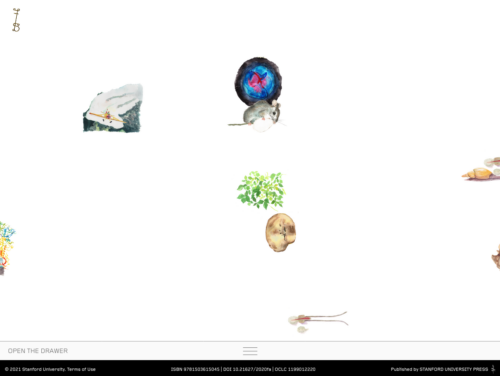

Feral Atlas invites you to navigate the land-, sea-, and airscapes of the Anthropocene. We trust that as you move through the site—pausing to look, read, watch, reflect, and perhaps occasionally scratch your head—you will slowly find your bearings, both in relation to the site’s structure and the foundational concerns and concepts to which it gives form. Feral Atlas has been designed to reward exploration. Following seemingly unlikely connections and thinking with a variety of media forms can help you to grasp key underlying ideas, ideas that are specifically elaborated in the written texts to be found in the “drawers” located at the bottom of every page.

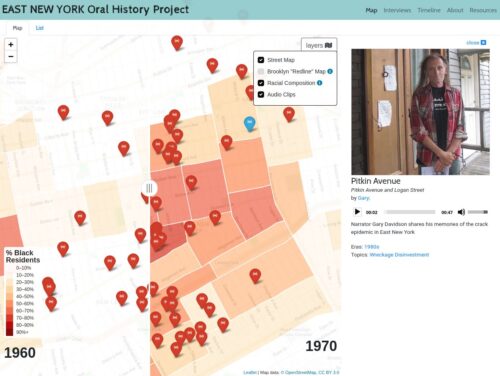

The East New York Oral History Project was designed to capture the personal experiences of people who lived in East New York from 1960 – 1970, during the time in which East New York rapidly changed from a primarily White to primarily Black and Latino community.

Archive Team is a loose collective of rogue archivists, programmers, writers and loudmouths dedicated to saving our digital heritage. Since 2009 this variant force of nature has caught wind of shutdowns, shutoffs, mergers, and plain old deletions – and done our best to save the history before it’s lost forever. Along the way, we’ve gotten attention, resistance, press and discussion, but most importantly, we’ve gotten the message out: IT DOESN’T HAVE TO BE THIS WAY.

This website is intended to be an offloading point and information depot for a number of archiving projects, all related to saving websites or data that is in danger of being lost. Besides serving as a hub for team-based pulling down and mirroring of data, this site will provide advice on managing your own data and rescuing it from the brink of destruction.

Launched in 2007, Data Center Map was the first research tool of its kind. We operate a global data center database, mapping data center locations worldwide. Our intention is to make it easier for buyers, sellers, investors, regulators and other professionals in the data center industry to gain insights into the data center markets of their interest.

We currently have 7945 data centers listed, from 152 countries worldwide.

Our database contains lists of data center operators and service providers, offering colocation, cloud and connectivity.

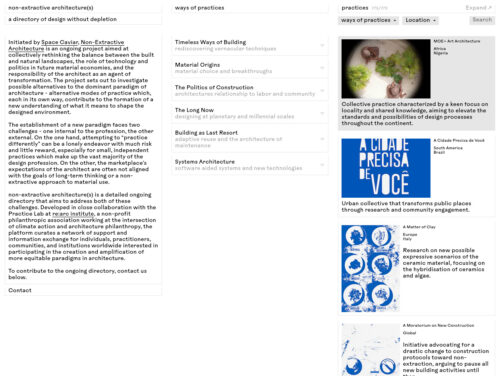

Initiated by Space Caviar, Non-Extractive Architecture is an ongoing project aimed at collectively rethinking the balance between the built and natural landscapes, the role of technology and politics in future material economies, and the responsibility of the architect as an agent of transformation.

“It’s a catastrophe, I’m completely lost.” Robert doesn’t mince his words. “Today, Skyblog is killing my work, for no reason.” Patrice, for his part, says he “didn’t see anything coming.” “I’m stunned and disappointed. When you get nearly 200 visits a day and people appreciate the work you do, of course it’s frustrating.” Alexandre also describes his disillusionment and laments the lack of communication. “Nobody sent emails to the users. A friend warned me, because he had seen the news by chance.”

Among the diehard users of Skyblog, who never stopped using the blogs that over the years became the outdated symbol of the early-2000s French internet, the news of the service’s closure came as a shock. On 23 June, in a post on the Skyblog team’s official blog, Pierre Bellanger, the founder and long-time president of Skyrock, announced the end of the site. For legal reasons, and to “preserve the platform and the skyblogs in their candid and eruptive presentation”, the blogs are to be frozen and removed from public access.

A material transfer agreement (MTA) is a contract that governs the transfer of tangible research materials between two organizations when the recipient intends to use them for its own research purposes. The MTA defines the rights of the provider and the rights and obligations of the recipient with respect to the materials and any progeny, derivatives, or modifications. Biological materials, such as reagents, cell lines, plasmids, and vectors, are the most frequently transferred materials, but MTAs may also be used for other types of materials, such as chemical compounds, mouse models, and even some types of software.

https://twitter.com/JaimeObregon/status/1645138600537858048?s=20

A headless Content Management System, or headless CMS, is a back end-only web content management system that acts primarily as a content repository. A headless CMS makes content accessible via an API for display on any device, without a built-in front end or presentation layer. The term ‘headless’ comes from the concept of chopping the ‘head’ (the front end) off the ‘body’ (the back end).
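
To make the back-end-only idea concrete, here is a minimal sketch using only Python’s standard library: content lives as plain structured data and is exposed as JSON at a hypothetical `/content/<slug>` route, with no templates or HTML rendering. The `CONTENT` dictionary, the route and the port are illustrative assumptions; a real headless CMS adds persistent storage, authentication and an editing interface.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple

# Hypothetical content repository: structured data only, no layout or templates.
CONTENT = {
    "about": {"title": "About us", "body": "Who we are and what we do."},
    "contact": {"title": "Contact", "body": "How to reach us."},
}


def get_entry_json(slug: str) -> Tuple[int, str]:
    """Return (HTTP status, JSON text) for one content entry."""
    entry = CONTENT.get(slug)
    if entry is None:
        return 404, json.dumps({"error": "not found"})
    return 200, json.dumps({"slug": slug, **entry})


class ContentAPIHandler(BaseHTTPRequestHandler):
    """Answers GET /content/<slug> with JSON; presentation is left to the client."""

    def do_GET(self) -> None:
        prefix = "/content/"
        if self.path.startswith(prefix):
            status, body = get_entry_json(self.path[len(prefix):])
        else:
            status, body = 404, json.dumps({"error": "unknown route"})
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8000) -> None:
    """Run the API locally until interrupted (blocks the calling thread)."""
    HTTPServer(("127.0.0.1", port), ContentAPIHandler).serve_forever()
```

After calling `serve()`, a request to `http://127.0.0.1:8000/content/about` would return that entry as JSON, which a website, a mobile app or any other front end could render in its own way.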

The Archigram archival project made the works of the seminal experimental architectural group Archigram available free online for an academic and general audience. It was a major archival work, and a new kind of digital academic archive, displaying material held in different places around the world and variously owned. It was aimed at a wide online design community, discovering it through Google or social media, as well as a traditional academic audience. It has been widely acclaimed in both fields.

The project has three distinct but interlinked aims: firstly to assess, catalogue and present the vast range of Archigram’s prolific work, of which only a small portion was previously available; secondly to provide reflective academic material on Archigram and on the wider picture of their work presented; thirdly to develop a new type of non-ownership online archive, suitable for both academic research at the highest level and for casual public browsing. The project hybridised several existing methodologies. It combined practical archival and editorial methods for the recovery, presentation and contextualisation of Archigram’s work, with digital web design and with the provision of reflective academic and scholarly material.

It was designed by the EXP Research Group in the Department of Architecture in collaboration with Archigram and their heirs and with the Centre for Parallel Computing, School of Electronics and Computer Science, also at the University of Westminster. It was rated ‘outstanding’ in the AHRC’s own final report and was shortlisted for the RIBA research awards in 2010. It received 40,000 users and more than 250,000 page views in its first two weeks live, taking the site into Twitter’s Top 1000 sites, and a steady flow of visitors thereafter. Further statistics are included in the accompanying portfolio. This output will also be returned to by Murray Fraser for UCL.

Nobody is better placed to talk about Internet business models than someone who has managed to run a sustainable business for 13 years in a sector where no one else has managed it since 2006: the Spanish press.

Kike Garcia de la Riva, co-founder of ElMundoToday (an outlet with no advertising banners), will talk to us about the keys to his Success/Survival.

What can we learn from his experience about making a living from what we do on the Internet?

“Moderate success can be explained by skill and hard work. Enormous success can only be attributed to luck.”

(Nassim Nicholas Taleb)

When it comes to protecting your sensitive personal data, or your organization’s data, the different terminology and jargon can often be an issue.

This is why we’ve compiled this A-Z data privacy glossary, in the hope that it might help you improve your data security.

What happens to architecture after the climate emergency undoes its foundational assumptions of growth, extraction, and progress?

Living with the climate emergency demands systemic change across economic, behavioural, and social structures, with profound implications for approaches to the built environment and spatial production. Architecture after Architecture examines how spatial practices might respond to such challenges, going beyond technical fixes, by addressing the cultural and socioeconomic factors that influence how we make, occupy, and share space.

There is also a different type of PDF known as PDF/A. PDF/A is a subset of PDF that is meant for archiving information. In order to preserve the information in the file and to ensure that the contents will still appear as they should even after a very long time in storage, PDF/A sets stricter standards than those used by PDF.

PDF/A is a special type of PDF meant for archiving documents

PDF/A does not allow audio, video, and executable content while PDF does

PDF/A requires that graphics and fonts be embedded into the file while PDF does not

PDF/A does not allow external references while PDF does

PDF/A does not allow encryption while PDF does
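
The restrictions listed above can be turned into a quick pre-check. The sketch below only scans a file’s raw bytes for a few PDF names that signal forbidden features (`/Encrypt`, `/JavaScript`, `/Launch`, `/Sound`, `/Movie`) and for font dictionaries with no embedded `/FontFile` stream. It is an assumption-heavy heuristic, not a conformance check: many PDFs keep objects inside compressed object streams that a byte scan cannot see, and external references are not checked at all. A dedicated validator such as veraPDF is the reliable route.

```python
import re
from typing import List

# Raw byte markers for features the list above says PDF/A does not allow.
FORBIDDEN_MARKERS = {
    rb"/Encrypt": "encryption",
    rb"/JavaScript": "executable content (JavaScript)",
    rb"/Launch": "executable content (launch action)",
    rb"/Sound": "audio",
    rb"/Movie": "video",
}


def pdfa_red_flags(pdf_bytes: bytes) -> List[str]:
    """Return rough warning labels for a PDF's raw bytes (a heuristic, not a validator)."""
    flags = []
    for marker, label in FORBIDDEN_MARKERS.items():
        # The lookahead stops a marker from matching the start of a longer PDF name.
        if re.search(marker + rb"(?![A-Za-z0-9])", pdf_bytes):
            flags.append(label)
    # PDF/A requires embedded fonts: font dictionaries without any /FontFile* stream is a warning sign.
    if re.search(rb"/Type\s*/Font(?![A-Za-z0-9])", pdf_bytes) and b"/FontFile" not in pdf_bytes:
        flags.append("fonts possibly not embedded")
    return flags
```

Usage would look like `pdfa_red_flags(open("report.pdf", "rb").read())`, where `report.pdf` is a hypothetical file; an empty list only means none of these markers were visible, not that the file is valid PDF/A.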

I used wget, which is available on any Linux-ish system (I ran it on the same Ubuntu server that hosts the sites).

wget --mirror -p --html-extension --convert-links -e robots=off -P . http://url-to-site

That command doesn’t throttle the requests, so it could cause problems if the server has high load. Here’s what that line does:

--mirror: turns on options suited to mirroring (recursion with unlimited depth, plus time-stamping), so rather than just downloading the single file at the root of the URL, it’ll now suck down the entire site.

-p: download all page requisites (images, stylesheets and other supporting media) rather than just the HTML.

--html-extension: appends .html to downloaded HTML pages whose names don’t already end in .html, to make sure they play nicely on whatever system you’re going to view the archive on (newer wget versions call this option --adjust-extension; the old name still works).

--convert-links: rewrite the URLs in the downloaded HTML files to point to the downloaded files rather than to the live site. This makes it nice and portable, with everything living in a self-contained directory.

-e robots=off: executes the wgetrc command robots=off, telling wget to ignore the site’s robots.txt exclusions. This is strictly Not a Good Thing To Do, but if you own the site, this is OK. If you don’t own the site being archived, you should obey all robots.txt files or you’ll be a Very Bad Person.

-P .: set the download directory prefix. I left it at the default “.” (which means “here”), but this is where you could pass in a directory path to tell wget where to save the archived site. Handy if you’re doing this on a regular basis (say, as a cron job or something…)

http://url-to-site: this is the full URL of the site to download. You’ll likely want to change this.
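
Because the command above doesn’t throttle its requests, here is a sketch that assembles the same wget invocation from Python and adds wget’s `--wait`, `--random-wait` and `--limit-rate` options, which space out requests and cap bandwidth. The one-second wait and the `200k` rate are arbitrary assumptions to tune per server, and the function names are hypothetical; robots.txt is obeyed unless you explicitly opt out for a site you own.

```python
import shutil
import subprocess
from typing import List


def build_mirror_command(url: str, dest: str = ".", wait_seconds: int = 1,
                         rate_limit: str = "200k",
                         ignore_robots: bool = False) -> List[str]:
    """Build the wget mirroring command from the text, with throttling added."""
    cmd = [
        "wget", "--mirror", "-p", "--html-extension", "--convert-links",
        f"--wait={wait_seconds}",      # pause between successive requests
        "--random-wait",               # vary that pause between 0.5x and 1.5x
        f"--limit-rate={rate_limit}",  # cap download bandwidth (k = kilobytes/second)
        "-P", dest,                    # directory to save the mirror into
    ]
    if ignore_robots:                  # only appropriate for sites you own
        cmd += ["-e", "robots=off"]
    cmd.append(url)
    return cmd


def mirror(url: str, dest: str = ".") -> int:
    """Run the throttled mirror and return wget's exit status."""
    if shutil.which("wget") is None:
        raise RuntimeError("wget is not installed or not on PATH")
    return subprocess.run(build_mirror_command(url, dest)).returncode
```

A call such as `mirror("http://url-to-site", "/path/to/archive")` (hypothetical path) is the kind of thing you could schedule as the cron job mentioned above. Passing the command as a list avoids shell quoting problems.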

The Web ARChive (WARC) archive format specifies a method for combining multiple digital resources into an aggregate archive file together with related information. The WARC format is a revision of the Internet Archive’s ARC_IA File Format[4] that has traditionally been used to store “web crawls” as sequences of content blocks harvested from the World Wide Web. The WARC format generalizes the older format to better support the harvesting, access, and exchange needs of archiving organizations. Besides the primary content currently recorded, the revision accommodates related secondary content, such as assigned metadata, abbreviated duplicate detection events, and later-date transformations.

From the discussion about Working with ARCHIVE.ORG, we learn that it is important to save not just files but also HTTP headers.

To download a file and save the request and response data to a WARC file, run this:

wget "http://www.archiveteam.org/" --warc-file="at"

This will download the file to index.html, but it will also create a file at-00000.warc.gz. This is a gzipped WARC file that contains the request and response headers (of the initial redirect and of the Wiki homepage) and the html data.

If you want to have an uncompressed WARC file, use the --no-warc-compression option:

wget "http://www.archiveteam.org/" --warc-file="at" --no-warc-compression
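
To see what ends up in the WARC file, the sketch below reads records back using only the standard library. It follows the basic WARC 1.0 record layout: a version line, header fields, a blank line, a content block whose size is given by `Content-Length`, then two CRLFs. It assumes well-formed input and ignores edge cases the specification allows, so a maintained library such as warcio is the safer choice for real collections. `gzip.open` copes with the one-gzip-member-per-record layout of `.warc.gz` files.

```python
import gzip
import io
from typing import BinaryIO, Dict, Iterator, List, Tuple

Record = Tuple[Dict[str, str], bytes]


def iter_warc_records(stream: BinaryIO) -> Iterator[Record]:
    """Yield (header fields, content block) for each record in an uncompressed WARC stream."""
    while True:
        version = stream.readline()
        if not version:
            return                          # end of input
        if not version.strip():
            continue                        # tolerate stray blank lines
        if not version.startswith(b"WARC/"):
            raise ValueError(f"expected a WARC version line, got {version!r}")
        headers: Dict[str, str] = {}
        while True:
            line = stream.readline()
            if line in (b"\r\n", b"\n", b""):
                break                       # blank line ends the header block
            name, _, value = line.decode("utf-8").partition(":")
            headers[name.strip()] = value.strip()
        block = stream.read(int(headers["Content-Length"]))
        stream.read(4)                      # skip the CRLF CRLF that ends each record
        yield headers, block


def parse_warc_bytes(data: bytes) -> List[Record]:
    """Parse WARC data already held in memory (decompress .warc.gz data first)."""
    return list(iter_warc_records(io.BytesIO(data)))


def read_warc(path: str) -> List[Record]:
    """Read a .warc or .warc.gz file from disk."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rb") as stream:
        return list(iter_warc_records(stream))
```

For example, looping over `read_warc("at-00000.warc.gz")` and printing `headers["WARC-Type"]` and `headers.get("WARC-Target-URI")` lists the captured request and response records alongside any other records wget wrote.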

When IA first started doing their thing, they came across a problem: how do you actually save all of the information related to a website as it existed at a point in time? IA wanted to capture it all, including headers, images, stylesheets, etc.

After a lot of revision, the smart folks there built a specification for a file format named WARC, for Web ARChive. The details aren’t super important, but the gist is that it will preserve everything, including headers, in a verifiable, indexed, checksummed format.
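
The “verifiable” part comes from digest header fields such as `WARC-Block-Digest`. Tools like wget commonly write these as a SHA-1 hash encoded in base32 with a `sha1:` prefix. The minimal sketch below computes and checks that form; it assumes SHA-1 in base32 and rejects any other algorithm label, although other algorithms and encodings do appear in the wild.

```python
import base64
import hashlib


def warc_sha1_digest(data: bytes) -> str:
    """Digest in the 'sha1:<BASE32>' form often used in WARC headers."""
    return "sha1:" + base64.b32encode(hashlib.sha1(data).digest()).decode("ascii")


def verify_digest(data: bytes, recorded: str) -> bool:
    """Check data against a recorded 'sha1:...' value such as WARC-Block-Digest."""
    algorithm, _, value = recorded.partition(":")
    if algorithm.strip().lower() != "sha1":
        raise ValueError(f"unsupported digest algorithm: {algorithm!r}")
    return warc_sha1_digest(data) == "sha1:" + value.strip().upper()
```

Passing a record’s content block and its `WARC-Block-Digest` value to `verify_digest` returns `False` if the bytes changed after capture, which is how an archive can be audited years later.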

wget --recursive --convert-links -mpck --html-extension --user-agent="Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/88.0.4324.146 Safari/537.36" -e robots=off site.com

The goal of this article is to explain our experience, as Society of Catalan Archivists and Records Managers (AAC) members, in the field of social web archiving. To that end, we have structured it in three main parts, the first of which is to show the importance of archival science as a political tool in the framework of the information society. The second part focuses on the path followed by the AAC from its first steps taken to preserve social web hashtags, in order to gain technical expertise, to the reflection on the theoretical background required to go beyond the mere collection of social web content that led us to the definition of a new type of archival fonds: the social fonds. Finally, the third part sets out the case study of #Cuéntalo. Thanks to our previous experiences, this hashtag, created to denounce male violence, enabled us to design a more robust project that not only included the gathering and preservation of data but also a vast auto-categorisation exercise using a natural language processing algorithm that assisted in the design of a dynamic and startling data visualisation covering the 160,000 original tweets involved.

ArchiveBox is a powerful self-hosted internet archiving solution written in Python 3. You feed it URLs of pages you want to archive, and it saves them to disk in a variety of formats depending on the configuration and the content it detects. ArchiveBox can be installed via Docker (recommended), apt, brew, or pip. It works on macOS, Windows, and Linux/BSD (both armv7 and amd64).

Do you want to share the links you discover? Shaarli is a minimalist bookmark manager and link sharing service that you can install on your own server. It is designed to be personal (single-user), fast and handy.

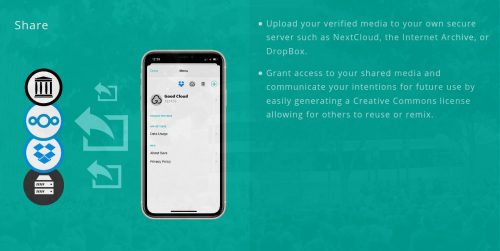

Now, more than ever, capturing media on mobile phones plays a key role in exposing global injustice.

OpenArchive helps individuals around the world to securely store and share the critical evidence they’ve captured.

Save

Share · Archive · Verify · Encrypt

Save is a new mobile media app designed by OpenArchive to help citizen reporters and eyewitnesses around the world preserve, protect, and amplify what they’ve documented.

SOLIVID is a collective project to build a collaborative map and an online resource bank about solidarity initiatives in response to the COVID-19 crisis.

All the data we collect will be open access.

The Unified Modeling Language (UML) is a general-purpose, developmental, modeling language in the field of software engineering that is intended to provide a standard way to visualize the design of a system.

Digitization of cultural heritage over the last 20 years has opened up very interesting possibilities for the study of our cultural past using computational “big data” methods. Today, as over two billion people create global “digital culture” by sharing their photos, video, links, writing posts, comments, ratings, etc., we can also use the same methods to study this universe of contemporary digital culture.

In this chapter I discuss a number of issues regarding the “shape” of the digital visual collections we have, from the point of view of researchers who use computational methods. They are working today in many fields including computer science, computational sociology, digital art history, digital humanities, digital heritage and Cultural Analytics – which is the term I introduced in 2007 to refer to all of this research, and also to a particular research program of our own lab that has focused on exploring large visual collections.

Regardless of what analytical methods are used in this research, the analysis has to start with some concrete existing data. The “shapes” of existing digital collections may enable some research directions and make others more difficult. So what is the data universe created by digitization, what does it make possible, and also impossible?

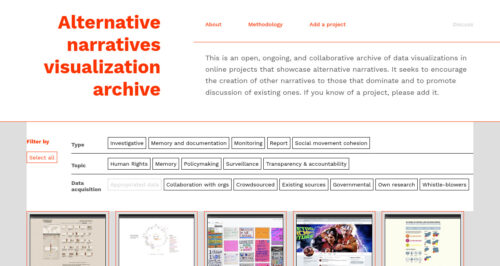

Modes of access and tools to manipulate data have brought marginalized actors to collaboratively create alternative narratives to those delivered by dominant power structures. Non-profit organizations and activist groups increasingly base their campaigns on data, using visualization as an agency tool for change. Data-driven alternative narratives counteract the hegemony of information, questioning the status quo and promoting the non-flattening of the data society, seeking to strengthen democracy. Data visualization is a decisive adversarial tool (DiSalvo, 2012) for turning data into alternative narratives. Translating data into visual representations for alternative narratives is an activist practice that requires a critical approach to data to make a political position evident and coherent.

This is an open, ongoing, and collaborative archive of data visualizations in online projects that showcase alternative narratives. It seeks to encourage the creation of other narratives to those that dominate and to promote discussion of existing ones. If you know of a project, please add it.

On Monday, the blogging platform Tumblr announced it would be removing all adult content after child pornography was discovered on some blogs hosted on the site. Given that an estimated one-quarter of blogs on the platform hosted at least some not safe for work (NSFW) content, this is a major content purge. Although there are ways to export NSFW content from a Tumblr page, Tumblr’s purge will inevitably result in the loss of a lot of adult content. Unless, of course, Reddit’s data hoarding community has anything to say about it.

On Wednesday afternoon, the redditor u/itdnhr posted a list of 67,000 NSFW Tumblrs to the r/Datasets subreddit. Shortly thereafter, they posted an updated list of 43,000 NSFW Tumblrs (excluding those that were no longer working) to the r/Datahoarders subreddit, a group of self-described digital librarians dedicated to preserving data of all types.

The Tumblr preservation effort, however, poses some unique challenges. The biggest concern, based on the conversations occurring on the subreddit, is that a mass download of these Tumblrs is liable to also contain some child porn. This would put whoever stores these Tumblrs at serious legal risk.

Still, some data hoarders are congregating on Internet Relay Chat (IRC) channels to strategize about how to pull and store the content on these Tumblrs. At this point, it’s unclear how much data that would represent, but one data hoarder estimated it to be as much as 600 terabytes.

Trying to preserve the blogosphere’s favorite nude repository is a noble effort, but doesn’t change the fact that Tumblr’s move to ban adult content will deal a serious blow to sex workers around the world. Indeed, the entire debacle is just another example of how giant tech companies like Apple continue to homogenize the internet and are the ultimate arbiters of what can and cannot be posted online.

Hello and welcome! We (Matt Stempeck, Micah Sifry of Civic Hall, and Erin Simpson, previously of Civic Hall Labs and now at the Oxford Internet Institute) put this sheet together to try to organize the civic tech field by compiling hundreds of civic technologies and grouping them to see what patterns emerge. We started doing this because we think that a widely-used definition and field guide would help us: share knowledge with one another, attract more participation in the field, study and measure impact, and move resources in productive directions. Many of these tools and social processes are overlapping: our categories are not mutually exclusive nor collectively exhaustive.

wget --recursive --no-clobber --page-requisites --html-extension --convert-links --domains website.org --no-parent www.website.org/tutorials/html/

This command downloads the Web site www.website.org/tutorials/html/.

The options are:

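--recursive: download the Web site recursively by following its links (up to wget’s default maximum depth of five levels; add -l inf for unlimited depth).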

--domains website.org: don’t follow links outside website.org.

--no-parent: don’t follow links outside the directory tutorials/html/.

--page-requisites: get all the elements that compose the page (images, CSS and so on).

--html-extension: save files with the .html extension.

--convert-links: convert links so that they work locally, off-line.

--no-clobber: don’t overwrite any existing files (used in case the download is interrupted and resumed).
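
The link filtering that --domains and --no-parent perform can be modelled in a few lines. This is a simplified sketch of the behaviour described above, not wget’s actual implementation, and the `in_scope` function and its parameters are hypothetical. It resolves each link against the start URL (assumed to include the `http://` scheme), keeps wget’s default of staying on the starting host unless `span_hosts` is set (the equivalent of wget’s -H), requires the host to fall within the listed domains, and rejects paths above the starting directory.

```python
from typing import Iterable
from urllib.parse import urljoin, urlsplit


def in_scope(link: str, start_url: str, domains: Iterable[str] = ("website.org",),
             span_hosts: bool = False) -> bool:
    """Simplified model of wget's --domains/--no-parent link filtering."""
    start = urlsplit(start_url)
    target = urlsplit(urljoin(start_url, link))   # resolve relative links
    host = target.hostname or ""
    # Without -H/--span-hosts, wget stays on the starting host.
    if not span_hosts and host != start.hostname:
        return False
    # --domains website.org: accept website.org itself or any of its subdomains.
    if not any(host == d or host.endswith("." + d) for d in domains):
        return False
    # --no-parent: never climb above the starting directory.
    start_dir = start.path[: start.path.rfind("/") + 1]
    return target.path.startswith(start_dir)
```

With `http://www.website.org/tutorials/html/` as the start URL, a relative link such as `intro.html` stays in scope, while `../css/style.css` resolves to `/tutorials/css/style.css` and is rejected by the --no-parent rule.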

Collective Housing Atlas aims to create an online library organized by a large number of categories, which makes it a powerful search engine of references about collective housing.