GPTZero. AI detector made to Preserve what’s human

GPTZero detects AI content from ChatGPT, GPT-5, Gemini, and checks writing quality to make every word worth reading.

Looking at costs this way tells me that Warp is operating at a huge loss. I live in Amsterdam so my monthly energy bill would be around 2k, yet I pay Warp no more than 90 euros.

Which brings me to the following: isn’t it cheaper to just hire a programmer from a low-wage country?

Not cheaper for me, mind you, I see the nominal difference. Cheaper for the environment maybe?

Is it?

Hiring a full-time skilled programmer in, say, India or Latin America would cost an average of 2,000 euros a month. Remote, of course. But similar in that I would have a developer on a screen.

So yes, for the environment, it would be cheaper. Lots. The average cost of home energy is around 50 euros in those countries, and multiple people live in a home.

As has been pointed out before, AI is an energy guzzler. The way it’s done now is tremendously inefficient: generating a single query response could cost as much energy as lighting a room for an hour.

But it’s early days yet. Early high pressure steam engines were also very inefficient, and prone to blowing up and wounding, maiming and killing who knows how many operators. James Watt’s designs were much safer, using low pressure to achieve better results.

So here’s to hoping that sometime soon we’ll invent a better way. Until then, it’s good to know that using a coding agent is costing someone, but probably not you, as much as hiring a human.

A proposal to standardise on using an /llms.txt file to provide information to help LLMs use a website at inference time.

Large language models increasingly rely on website information, but face a critical limitation: context windows are too small to handle most websites in their entirety. Converting complex HTML pages with navigation, ads, and JavaScript into LLM-friendly plain text is both difficult and imprecise.

While websites serve both human readers and LLMs, the latter benefit from more concise, expert-level information gathered in a single, accessible location. This is particularly important for use cases like development environments, where LLMs need quick access to programming documentation and APIs.

We propose adding a /llms.txt markdown file to websites to provide LLM-friendly content. This file offers brief background information, guidance, and links to detailed markdown files.
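As a rough illustration of what such a file might contain, here is a minimal Python sketch that renders one. The layout it produces (a title heading, a short blockquote summary, then sections of markdown links) is an assumption based on the description above, and the function name, project name and URL are all hypothetical.

```python
def build_llms_txt(title, summary, sections):
    """Render a minimal /llms.txt body as markdown.

    `sections` maps a section heading to a list of (name, url, note) tuples.
    The layout is an assumed one, not taken from the proposal text.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            # One markdown link per detailed file, with a short note
            lines.append(f"- [{name}]({url}): {note}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical project used only to show the shape of the output
example = build_llms_txt(
    "Example Project",
    "A hypothetical library used only to illustrate the layout.",
    {
        "Docs": [
            ("Quick start", "https://example.com/quickstart.md",
             "installation and first steps"),
        ]
    },
)
print(example)
```

The point of the sketch is only that the file stays short and links out to the detailed markdown files instead of inlining them.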

The New York Times reports that Trump posted 19 fake images and videos during his 2024 presidential campaign, from the outright absurd to the more plausible—such as a woman wearing a “Swifties for Trump” T-shirt. Since his election he’s posted 28 AI images and videos, including self-portraits as a king and as the pope. For anyone worried that AI-generated imagery might become a routine part of political influence operations, that horse has left the stable.

A recent study from fact-checking site NewsGuard found chatbots responding to queries about the news with falsehoods 35 per cent of the time—roughly double the rate of a year prior.

NewsGuard explains that the rise comes from AIs being programmed to answer questions even if they are uncertain whether an answer is correct. A year ago, AI bots would refuse to answer questions about the news 31 per cent of the time, while during this more recent round of tests the bots never refused to answer.

The Sora app introduces a particularly potent feature: the ability for users to grant permission for their likeness to be used by friends in video creations. With just a few spoken words and head movements, the AI can capture a person’s digital twin, ready to be inserted into any scenario imaginable.

This technology erodes the foundational trust we once had in video evidence. Previously, seeing was believing. Now, every video clip must be viewed with a healthy dose of scepticism. The potential for misuse is immense, from personal harassment and character assassination to large-scale disinformation campaigns.

While there are discussions around invisible watermarks and detection software, we are in a race against our own creations. As a society, we are largely unprepared for a world in which we cannot trust our own eyes. This isn’t a distant, futuristic problem; it is here now. The immediate need for digital literacy education and robust verification standards has never been more urgent.

I have a bold hypothesis.

I think AI companies might be intentionally configuring their smart models to not solve problems quickly, particularly the coding requests, because if they did, their revenue would be severely impacted by quick and short solutions.

If the model over-engineers, then I need to talk to it a lot to fix the problem, particularly with setups that use APIs, so they get way more tokens burnt. So more revenue for them (or I need a more unlimited subscription).

Yeah, I know, it sounds insane. But think about your experience with code generation: wasn’t it significantly more efficient before the models became smart/thinking/whatever? How come these significantly better models produce significantly more over-engineering, where the only benefit is burning tokens?

This is a great, 100% free tool I developed after uploading this video. It will allow you to choose an LLM and see which GPUs could run it… : https://aifusion.company/gpu-llm/

Min Hardware requirements (up to 16b q4 models) (eg. Llama3.1 – 8b)

RTX 3060 12GB VRAM : https://amzn.to/3M0HvsL

Intel i5 or AMD Ryzen 5

Intel i5 : https://amzn.to/3WGZtp3

Ryzen 5 : https://amzn.to/46IigoC

36GB RAM

1TB SSD : https://amzn.to/4cBebEd

Recommended Hardware requirements (up to 70b q8 models) (eg. Llama3.1 – 70b)

RTX 4090 24GB VRAM : https://amzn.to/3AjIHow

Intel i9 or AMD Ryzen 9

Intel i9 : https://amzn.to/3YCeLxW

AMD Ryzen 9 : https://amzn.to/3YIaUiT

48GB RAM

2TB SSD : https://amzn.to/3YFQ83A

Professional Hardware requirements (up to 405b and more) (eg. Llama3.1 – 405b)

Stack of A100 GPUs or A6000 GPUs

https://amzn.to/3yojZ5T

Enterprise grade CPUs

https://amzn.to/3YDgByw

https://amzn.to/4dEbfY2
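To put the "q4" and "q8" labels in the lists above into numbers, here is a back-of-the-envelope Python sketch. It assumes q4 and q8 mean roughly 4 and 8 bits per weight, and it counts the weights only, ignoring the context cache and runtime overhead, so real usage will be higher. The function name is hypothetical.

```python
def weight_memory_gb(params_billions, bits_per_weight):
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# The two quantized examples named in the lists above
for label, params, bits in [("8b q4", 8, 4), ("70b q8", 70, 8)]:
    print(f"{label}: about {weight_memory_gb(params, bits):.0f} GB for weights")
```

Comparing the result with a card's VRAM gives a first hint of whether the whole model fits on the GPU or whether part of it would have to sit in system RAM.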

OpenAI has protested a court order that forces it to retain its users’ conversations. The creator of the ChatGPT AI model objected to the order, which is part of a copyright infringement case against it by The New York Times and other publishers.

The news organizations argued that ChatGPT was presenting their content in its responses to the point where users were reading this material instead of accessing their paid content directly.

The publishers said that deleted ChatGPT conversations might show users obtaining this proprietary published content via the service.

“OpenAI is NOW DIRECTED to preserve and segregate all output log data that would otherwise be deleted on a going forward basis until further order of the Court (in essence, the output log data that OpenAI has been destroying), whether such data might be deleted at a user’s request or because of ‘numerous privacy laws and regulations’ that might require OpenAI to do so.”

The thing is, last night while I was chatting with my partner, the topic came up that Google now shoves AI at you no matter what, whether you want it or not. We ran a couple of tests and, sure enough: if you add certain swear words, the AI-generated results are turned off.

…if you want to feel like a hacker you have to wear a hoodie, type very fast (on a very loud mechanical gaming keyboard) and, above all, use swear words when searching on Google.

Vibe coding (or vibecoding) is an approach to producing software by using artificial intelligence (AI), where a person describes a problem in a few natural language sentences as a prompt to a large language model (LLM) tuned for coding. The LLM generates software based on the description, shifting the programmer’s role from manual coding to guiding, testing, and refining the AI-generated source code.

Let’s start by briefly describing what fine-tuning is. It is all about adjusting a model to fit your specific needs by tweaking its weights. Imagine you’re dealing with legal documents where words like ‘consideration’ take on a whole new meaning compared to everyday speech or what the model has been trained on. Fine-tuning steps in to make sure the model gets these specialized terms right. It’s not just about words either — you can also set up the model to follow specific rules, like keeping answers short and to the point, or understanding your business needs to a deeper level. So, if you’re planning to deploy it in production, this process transforms a general-purpose model into something custom-built for your data.

The lightweight models, specifically the 1B and 3B ({N}B = N billion parameters, for positive integer N), are among the most interesting for a variety of reasons. They are relatively easy to run locally, unlike the larger versions of LLMs, and Meta claims they can be smoothly deployed on hardware found in mobile devices. This opens the door to many applications, as language processing can be done locally, without data leaving the device. As a result, these models are not reliant on a stable internet connection and offer a more private handling of sensitive information. With these advantages, we can expect to see more tools like personal assistants running on our smartphones.

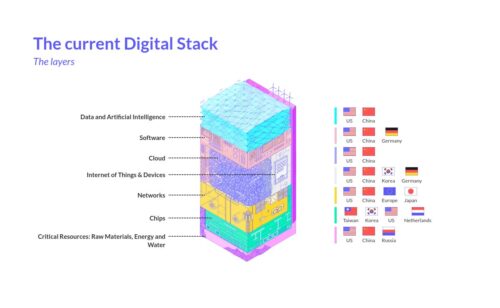

Sovereign AI, open-source ecosystems, green supercomputing, data commons, and sovereign cloud: Building homegrown alternatives to empower Europe to reclaim control of its digital future—layer by layer, innovation by innovation.

Interestingly, when I fed all my symptoms and medical data from before the rheumatologist visit into GPT, it suggested the same diagnosis I eventually received. After sharing this experience, I discovered many others facing similar struggles with fragmented medical histories and unclear diagnoses. That’s what motivated me to turn this into an open source tool for anyone to use. While it’s still in early stages, it’s functional and might help others in similar situations.

https://github.com/OpenHealthForAll/open-health

**What it can do:**

* Upload medical records (PDFs, lab results, doctor notes)

* Automatically parses and standardizes lab results:

– Converts different lab formats to a common structure

– Normalizes units (mg/dL to mmol/L etc.)

– Extracts key markers like CRP, ESR, CBC, vitamins

– Organizes results chronologically

* Chat to analyze everything together:

– Track changes in lab values over time

– Compare results across different hospitals

– Identify patterns across multiple tests

* Works with different AI models:

– Local models like Deepseek (runs on your computer)

– Or commercial ones like GPT4/Claude if you have API keys
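The unit normalization step in the list above (mg/dL to mmol/L) depends on the molar mass of each analyte, so one fixed factor will not do. The following is a minimal Python sketch of that conversion, not the project's actual code. The function name and the two-entry lookup table are hypothetical.

```python
# Molar masses in g/mol. Only two analytes are listed here for illustration.
MOLAR_MASS_G_PER_MOL = {
    "glucose": 180.16,
    "cholesterol": 386.65,
}


def mg_dl_to_mmol_l(value_mg_dl, analyte):
    """Convert a concentration from mg/dL to mmol/L for a known analyte."""
    # mg/dL * 10 gives mg/L; dividing mg/L by g/mol gives mmol/L
    return value_mg_dl * 10 / MOLAR_MASS_G_PER_MOL[analyte]


print(round(mg_dl_to_mmol_l(90, "glucose"), 2))
print(round(mg_dl_to_mmol_l(200, "cholesterol"), 2))
```

An unknown analyte raises `KeyError` instead of guessing a factor, which seems the safer default for medical values.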

**Getting Your Medical Records:**

If you don’t have your records as files:

– Check out [Fasten Health](https://github.com/fastenhealth/fasten-onprem) – it can help you fetch records from hospitals you’ve visited

– Makes it easier to get all your history in one place

– Works with most US healthcare providers

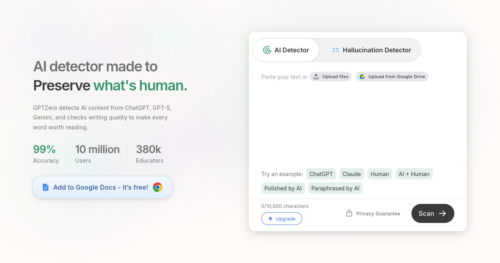

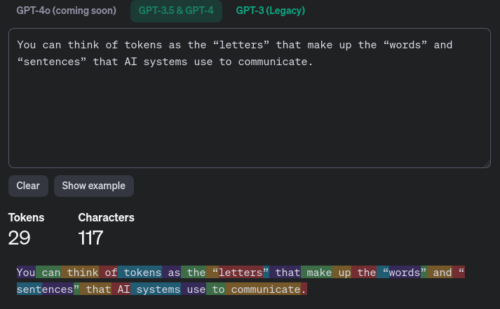

OpenAI’s large language models (sometimes referred to as GPTs) process text using tokens, which are common sequences of characters found in a set of text. The models learn to understand the statistical relationships between these tokens, and excel at producing the next token in a sequence of tokens.

You can think of tokens as the “letters” that make up the “words” and “sentences” that AI systems use to communicate.

A helpful rule of thumb is that one token generally corresponds to ~4 characters of text for common English text. This translates to roughly ¾ of a word (so 100 tokens ~= 75 words).
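The rule of thumb can be turned into a quick estimator. This Python sketch uses only the two ratios quoted above (about 4 characters per token and about ¾ of a word per token). It is a rough approximation, not a tokenizer, and the function names are hypothetical.

```python
import math

CHARS_PER_TOKEN = 4      # rule of thumb for common English text
WORDS_PER_TOKEN = 0.75   # so 100 tokens is roughly 75 words


def estimate_tokens_from_chars(text):
    """Rough token count from character length, rounded up."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_words_from_tokens(tokens):
    """Rough word count that a given number of tokens corresponds to."""
    return round(tokens * WORDS_PER_TOKEN)


sample = "Tokens are common sequences of characters found in a set of text."
print(estimate_tokens_from_chars(sample))
print(estimate_words_from_tokens(100))
```

For anything that depends on exact counts, such as billing or context limits, the model's own tokenizer is the only reliable source.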

The process of breaking text down into tokens is called tokenization. This allows the AI to analyze and “digest” human language into a form it can understand. Tokens become the data used to train, improve, and run the AI systems.

Why Do Tokens Matter? There are two main reasons tokens are important to understand:

Strategies for Managing Tokens

Because tokens are central to how LLMs work, it’s important to learn strategies to make the most of them:

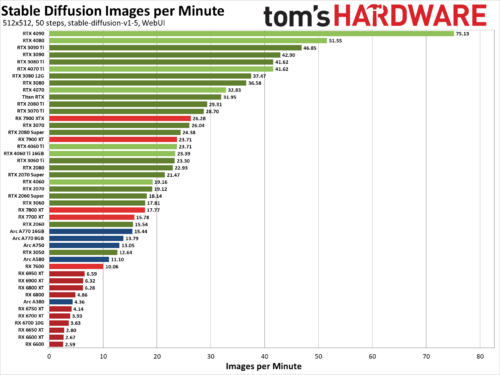

We’ve benchmarked Stable Diffusion, a popular AI image generator, on 45 of the latest Nvidia, AMD, and Intel GPUs to see how they stack up. We’ve been poking at Stable Diffusion for over a year now, and while earlier iterations were more difficult to get running (never mind running well), things have improved substantially. Not all AI projects have received the same level of effort as Stable Diffusion, but this should at least provide a fairly insightful look at what the various GPU architectures can manage with AI workloads given proper tuning and effort.

The easiest way to get Stable Diffusion running is via the Automatic1111 webui project. Except, that’s not the full story. Getting things to run on Nvidia GPUs is as simple as downloading, extracting, and running the contents of a single Zip file. But there are still additional steps required to extract improved performance, using the latest TensorRT extensions. Instructions are at that link, and we’ve previously tested Stable Diffusion TensorRT performance against the base model without tuning if you want to see how things have improved over time. Now we’re adding results from all the RTX GPUs, from the RTX 2060 all the way up to the RTX 4090, using the TensorRT optimizations.

For AMD and Intel GPUs, there are forks of the A1111 webui available that focus on DirectML and OpenVINO, respectively. We used these webui OpenVINO instructions to get Arc GPUs running, and these webui DirectML instructions for AMD GPUs. Our understanding, incidentally, is that all three companies have worked with the community in order to tune and improve performance and features.

Physics has empiricism. If your physical theory doesn’t make a testable prediction, physicists will make fun of you. Those that do make a prediction are tested and adopted or refuted based on the evidence. Physics is trying to describe things that exist in the physical universe, so physicists have the luxury of just looking at stuff and seeing how it behaves.

Mathematics has rigor. If your mathematical claim can’t be broken down into the language of first order logic or a similar system with clearly defined axioms, mathematicians will make fun of you. Those that can be broken down into their fundamentals are then verified step by step, with no opportunity for sloppy thinking to creep in. Mathematics deals with ontologically simple entities, so it has no need to rely on human intuition or fuzzy high-level concepts in language.

Philosophy has neither of these advantages. That doesn’t mean it’s unimportant; on the contrary, philosophy is what created science in the first place! But without any way of grounding itself in reality, it’s easy for an unscrupulous philosopher to go off the rails. As a result, much of philosophy ends up being people finding justifications for what they already wanted to believe anyway, rather than any serious attempt to derive new knowledge from first principles. (Notice how broad the spread of disagreement is among philosophers on basically every aspect of their field, compared to mathematicians and physicists.)

This is not a big deal when philosophy is a purely academic exercise, but it becomes a problem when people are turning to philosophers for practical advice. In the field of artificial intelligence, things are moving quickly, and people want guidance about what’s to come. Should we consider AI to be a moral patient? Does moral realism imply that advanced AI will automatically prioritize humans’ best interests, or does the is-ought problem prevent that? What do concepts like “intelligence” and “values” actually mean?

Released with little fanfare during the first days of the pandemic, the eight-episode series DEVS is one of the fictions of recent years most likely to become a future cult classic. Set in a deeply melancholic, twilight Silicon Valley, this story by British filmmaker Alex Garland is not directly about artificial intelligence: its plot traces a fable about quantum computing, fate versus free will, and the possibility of reconstructing every unique moment of human experience. Jorge Luis Borges would probably have loved it.

The story has been told a thousand times. If we had to explain the origins of the tech industry’s intellectual creed (what Richard Barbrook and Andy Cameron called “the Californian ideology”), its fundamental components are the unlikely encounter, six decades ago south of San Francisco, between hippies and computer engineers; between a technocratic vision inherited from the Cold War military-industrial complex and the counterculture’s desires for collective emancipation and the liberation of consciousness. Stewart Brand’s legendary Whole Earth Catalog (the seminal publication of digital culture), the proposals of the visionary architect Buckminster Fuller, the experiments in communal living attempted in communes like Drop City… were a breeding ground for entrepreneurs who, like Steve Jobs, imagined a near future in which the PC was as much an accelerator of efficiency as a tool for personal fulfilment and creative autonomy. An intellectual prosthesis, a “bicycle for the mind” that would let us reach places we could not reach as an exclusively biological species.

The tech industry is today at its most existential moment since at least the 1990s, with the emergence of the commercial Internet. The AI ethics movement believes that the possible risks of deep learning and neural networks call for controlled, careful development that allows their gradual introduction into every aspect of daily life. The accelerationists argue that these fears are conservative and that the inevitable development of AI will bring with it a new era of human prosperity and growth, along with solutions to climate change and incurable diseases.

…rather than a tool for spiritual transcendence, AI will be yet another system for concentrating power in a world of growing inequality, unless we change some of its fundamental rules.

https://twitter.com/JaimeObregon/status/1645138600537858048?s=20

Whatever your thoughts on AI bots, you may want to take action on your own website to block ChatGPT from crawling, indexing, and using your website content and data.
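One common way to do this is a `robots.txt` rule. The Python sketch below checks such a rule with the standard library's `urllib.robotparser`. It assumes the crawler identifies itself as `GPTBot`, the user-agent token OpenAI documents for its crawler (the text above does not name it), and that the crawler honours `robots.txt`. The helper name and example URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Assumed rules: block the GPTBot user agent everywhere, allow everyone else
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "GPTBot", "https://example.com/post"))
print(is_allowed(ROBOTS_TXT, "SomeOtherBot", "https://example.com/post"))
```

`robots.txt` is advisory, so this only keeps out crawlers that choose to respect it.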

Fashion brand Levi Strauss & Co has announced a partnership with digital fashion studio Lalaland.ai to make custom artificial intelligence (AI) generated avatars in what it says will increase diversity among its models.

WordPress plugin developers are adopting AI-powered tech and building it into their products, such as RankMath’s AI-generated suggestions for creating SEO-friendly content, WordPress.com’s experimental blocks for AI-generated images and content, and Setary’s plugin that uses AI to write and bulk edit WooCommerce product descriptions. The wpfrontpage site is tracking these plugins, but WordPress.org also lists dozens of plugins with AI, many of them created to write content or generate images.

Get a short AI-written description of yourself based on your latest tweets. What do other users perceive from what you tweet?

YOLOv4 has for a while been very tricky to install… until today. I will show you how to install YOLOv4 TensorFlow running on video in under 5 minutes.

You can run this either on CPU or CUDA Supported GPU (Nvidia Only). I achieved 3 FPS on CPU and 30 FPS on GPU (1080Ti)

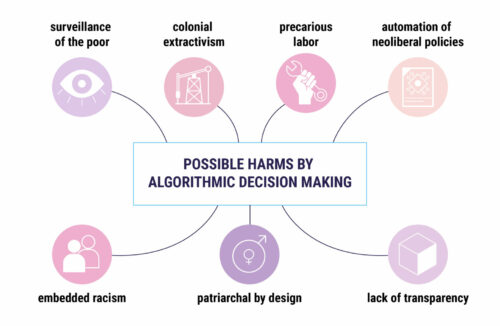

Feministai.net is an ongoing effort, a work-in-progress debate that seeks to contribute to the development of a feminist framework to question algorithmic decision-making systems that are being deployed by the public sector. Our ultimate goal is to develop arguments and build bridges for advocacy with different human rights groups, from women’s rights defenders to LGBTIQ+ groups, especially in Latin America, to address the trend in which governments are adopting A.I. systems to deploy social policies, not only without considering human rights implications but also in disregard of oppressive power relations that might be amplified through a false sense of neutrality brought by automation. Automation of the status quo, pervaded by inequalities and discrimination.

The current debate on A.I. principles and frameworks is mostly focused on “how to fix it?” instead of “why do we actually need it?” and “for whose benefit?”. Therefore, the first tool of our toolkit to question A.I. systems is the scheme of Oppressive A.I. that we drafted based on both empirical analysis of cases from Latin America and a bibliographic review of critical literature. Is a particular A.I. system based on surveilling the poor? Is it automating neoliberal policies? Is it based on precarious labor and colonial extractivism of data bodies and resources from our territories? Are those who develop it part of the group targeted by it, or is it likely to restate structural inequalities of race, gender, and sexuality? Can the wider community have enough transparency to check for themselves the accuracy of the answers to the previous questions?

What is a feminist approach to consent? How can it be applied to technologies? Those simple questions were able to shed light on how limited the individualistic notion of consent proposed in data protection frameworks is.

Miquela was born in 2016. An Instagram profile set her story in motion: a young Hispanic-Brazilian woman, living in Los Angeles and projecting an It-girl identity, began leaving her digital trail and prompting all kinds of speculation (such as that she was a campaign to promote the game The Sims). Three years on, we know a little more about what the story is about: “a transmedia studio that creates narrative universes and digital characters”. That is what can be read on Brud’s terse introductory website (it is actually a Google Doc).

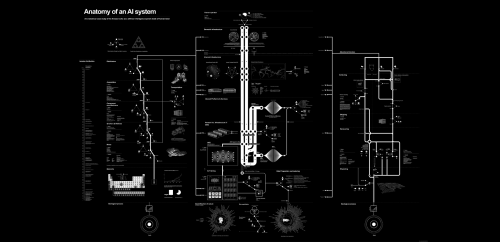

The Amazon Echo as an anatomical map of human labor, data and planetary resources

Yet in the twenty-first century, power will be determined not by one’s nuclear arsenal, but by a wider spectrum of technological capabilities based on digitization. Those who aren’t at the forefront of artificial intelligence (AI) and Big Data will inexorably become dependent on, and ultimately controlled by, other powers. Data and technological sovereignty, not nuclear warheads, will determine the global distribution of power and wealth in this century. And in open societies, the same factors will also decide the future of democracy.

The most important issue facing the new European Commission, then, is Europe’s lack of digital sovereignty. Europe’s command of AI, Big Data, and related technologies will determine its overall competitiveness in the twenty-first century. But Europeans must decide who will own the data needed to achieve digital sovereignty, and what conditions should govern its collection and use.

In the broadest sense, AI refers to machines that can learn, reason, and act for themselves. They can make their own decisions when faced with new situations, in the same way that humans and animals can.

As it currently stands, the vast majority of the AI advancements and applications you hear about refer to a category of algorithms known as machine learning. These algorithms use statistics to find patterns in massive amounts of data. They then use those patterns to make predictions on things like what shows you might like on Netflix, what you’re saying when you speak to Alexa, or whether you have cancer based on your MRI.

Google is reportedly working on an A.I.-based health and wellness coach.

Thanks to its spectrum of hardware products, Google would have a notable advantage over existing wellness coaching apps. While its coach, as reported, would primarily exist on smartwatches to start, Android Police noted that the company could include a smartphone counterpart as well. The company could also eventually spread it to Google Home or Android TV. The latter is uncharted territory for these kinds of apps, which are typically limited to smartphones and wearables. With availability in the home, lifestyle coaching recommendations could become increasingly contextual and less obtrusive. If you ask for a chicken parmesan dinner recipe, it could offer a healthier alternative instead; or if you’re streaming music at 10 p.m. and have set a goal to get more sleep, perhaps it could interrupt your music playback to remind you to start getting ready for bed. A smartwatch or phone could do this too, of course, but by linking up its product ecosystem, Google could deliver helpful notifications in the context that makes the most sense.