One Thing Well

A weblog about simple, useful software.

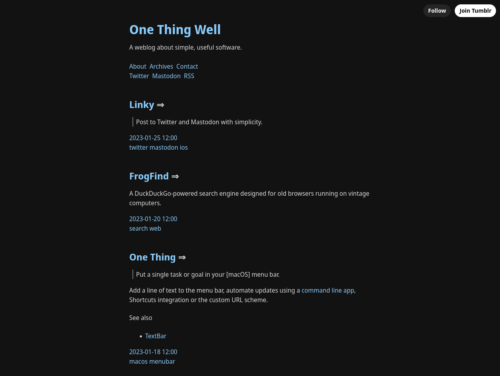

Vite is a blazing fast frontend build tool powering the next generation of web applications.

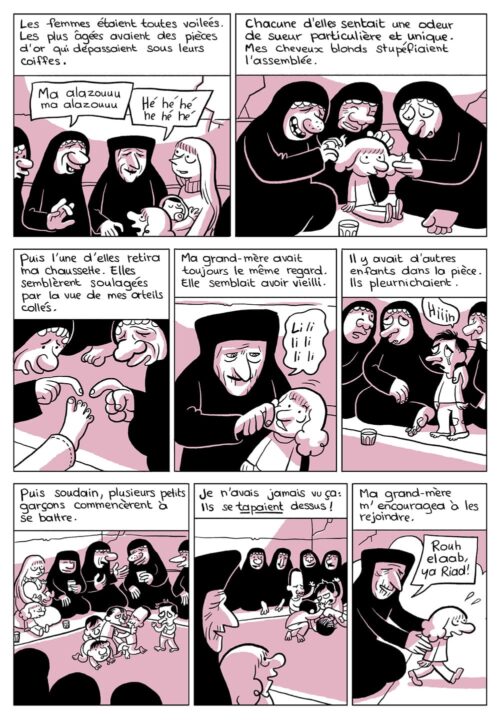

In the first volume, Riad describes how his parents met in Paris and their move to Libya, then to the village of Ter Maaleh in Syria. He lays the groundwork for the main themes of the series: the figure of the father, the geopolitical context of the Middle East at the time, and the contrast between European and Eastern cultures and traditions.

oklch() is a new way to define CSS colors. In oklch(L C H) or oklch(L C H / a), each item corresponds as follows:

- L is perceived lightness (0–1). “Perceived” means that it has consistent lightness for our eyes, unlike L in hsl().
- C is chroma, from gray to the most saturated color.
- H is the hue angle (0–360).
- a is opacity (0–1 or 0–100%).

The benefits of OKLCH:

- Compared with hsl(), OKLCH is better for color modifications and palette generation. It uses perceptual lightness, so no more unexpected results, like we had with darken() in Sass.
- Compared with rgb() or hex (#ca0000), OKLCH is human readable. You can quickly and easily know which color an OKLCH value represents simply by looking at the numbers. OKLCH works like HSL, but it encodes lightness better than HSL.
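As a rough illustration of the palette-generation point, the sketch below builds CSS oklch() strings in Python, holding lightness and chroma fixed while rotating the hue. The function names (`oklch`, `hue_palette`) and the two-decimal formatting are assumptions made for this example; they do not come from any library.

```python
def oklch(lightness, chroma, hue, alpha=None):
    """Format a CSS oklch() color string.

    lightness: perceived lightness, 0-1
    chroma:    0 (gray) upward
    hue:       angle in degrees, wrapped into 0-360
    alpha:     optional opacity, 0-1
    """
    if not 0 <= lightness <= 1:
        raise ValueError("lightness must be between 0 and 1")
    if chroma < 0:
        raise ValueError("chroma must be non-negative")
    body = f"{lightness:.2f} {chroma:.2f} {hue % 360:g}"
    if alpha is not None:
        body += f" / {alpha:g}"
    return f"oklch({body})"


def hue_palette(lightness, chroma, count, start_hue=0):
    """Return `count` colors with evenly spaced hues and the same L and C."""
    step = 360 / count
    return [oklch(lightness, chroma, start_hue + i * step) for i in range(count)]


if __name__ == "__main__":
    # Four colors that should look equally light, 90 degrees of hue apart.
    for color in hue_palette(0.7, 0.15, 4, start_hue=30):
        print(color)
```

Because L is perceptual, every color in such a palette should read as roughly equally light, which is the property hsl() does not give you.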

The Conversation is a 1974 American neo-noir mystery thriller film written, produced, and directed by Francis Ford Coppola. It stars Gene Hackman as surveillance expert Harry Caul who faces a moral dilemma when his recordings reveal a potential murder.

ratgdo is a WiFi control board for your garage door opener that works over your local network using ESPHome or HomeKit.

At FULU, we believe that people should control the tech they bought and own.

If companies can modify internet-connected products and charge subscriptions after people have already purchased them, what does it mean to own anything anymore?

…Chamberlain started shutting down support for most third-party access to its MyQ servers. The company said it was trying to improve the reliability of its products. But this effectively broke connections that people had set up to work with Apple’s Home app or Google’s Home app, among others. Chamberlain also started working with partners that charge subscriptions for their services, though a basic app to control garage doors was still free.

While Mr. Wieland said RATGDO sales spiked after Chamberlain made those changes, he believes the popularity of his device is about more than just opening and closing a garage. It stems from widespread frustration with companies that sell internet-connected hardware that they eventually change or use to nickel-and-dime customers with subscription fees.

Too often, we are losing control of our personal technology, and the list of examples keeps growing. BMW made headlines in 2022 when it began charging subscriptions to use heated seats in some cars — a decision it reversed after a backlash. In 2021, Oura, the maker of a $350 sleep-tracking device, angered customers when it began charging a $6 monthly fee for users to get deeper analysis of their sleep. (Oura is still charging the fee.)

For years, some printer companies have required consumers to buy proprietary ink cartridges, but more recently they began employing more aggressive tactics, like remotely bricking a printer when a payment is missed for an ink subscription.

The activists and tinkerers rebelling against superfluous hardware subscriptions and fighting for device ownership are part of the broader “right to repair” movement, a consumer advocacy campaign that has focused on passing laws nationwide that require tech and appliance manufacturers to provide the tools, instructions and parts necessary for anyone to fix products, from smartphones to refrigerators.

In a perfect world, consumers would be able to load whatever software they wanted onto the hardware they owned. But the Digital Millennium Copyright Act, introduced in the 1990s to combat content piracy, made it potentially criminal to circumvent digital locks that companies embed into copyrighted software. That’s why we generally don’t see tinkerers publish tools that remove subscription requirements from heated car seats, sleep-tracking devices and printers, said Nathan Proctor, a director at U.S. PIRG, a consumer advocacy nonprofit.

Kyle Wiens, the chief executive of iFixit, a company that sells repair parts, offered this rule of thumb: Always opt for the “dumb” devices — the refrigerators, dishwashers, exercise bikes and coffee makers that lack a Wi-Fi connection or screen.

“That smart fridge would make your life worse in every way,” Mr. Wiens said.

Ana (Iria del Río) turns 30 on New Year's Day with her life still unsettled: she lives in a shared flat, dislikes her job, and changes friends often. Óscar (Francesco Carril) turns 30 on New Year's Eve with his life almost settled: a doctor by vocation, loyal friends, and an on-again, off-again relationship. On the night they both turn 30, they meet, fall in love, and begin a relationship…

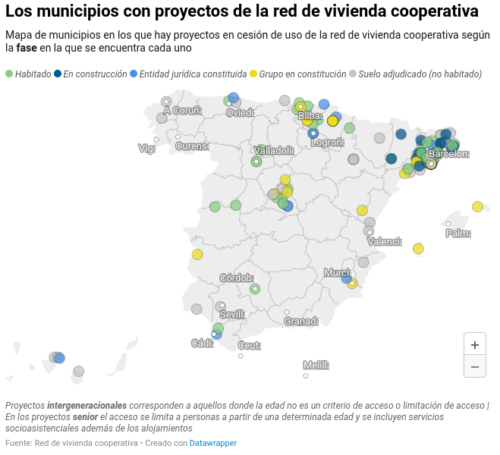

According to data from the Red de Vivienda Cooperativa, there are around 200 projects of this kind under way across Spain, 53 of them inhabited by close to a thousand people like Déborah and her family. As the following map shows, using the network's data, most of these projects are in Catalunya, a region with a strong cooperative tradition, but also one where these housing tools have had political backing, initially from Barcelona city council during the term of mayor Ada Colau, from 2015 onward, and later from other local councils and regional bodies, with legislation that gives these entities rights of first refusal and redemption (tanteo y retracto).

Much has been made in recent years about the ubiquity of screens and the negative effect they have on our lives. “Wall-E” takes this concept to an extreme as the passengers aboard the Axiom are never without a screen to look at. They zoom around the starship on hover chairs, a holo screen blocking their view of the world at large. When Wall-E inadvertently breaks two passengers out of their tech-coma, both are surprised to discover the ship has a pool.

All satire is a bit far-fetched, but in stretching to get a laugh “Wall-E” quietly comments on how often we serve technology instead of the other way around. Captain McCrea is purportedly in charge but is undercut and overruled by Auto, the ship’s AI, at every turn. When McCrea finally decides not to acquiesce to Auto’s demands, the AI locks the captain in his chambers. Only with this mutiny does McCrea recognize how much control he has handed over to the AI. This awakening mirrors what slowly happens across the Axiom as the passengers awake to how much of life they’ve been missing out on.

Shortly after Wall-E arrives on the Axiom, we get a glimpse of Captain McCrea’s personal quarters. Holograms of previous captains are projected on the wall. As the camera pans by, the obvious thing to note is just how much fatter each successive captain has gotten. However, the observant viewer learns an interesting tidbit that otherwise goes unnoted. The Axiom captains are all very long-lived. Their average years of service are 131 years, and that’s just time served!

Since we’re on the subject, let’s talk about those shakes. After Captain McCrea takes his position on the Axiom’s bridge, the computer runs through status updates on all of the ship’s major systems. Amid the sorts of stuff you’d expect — mechanical systems, reactor core, life support — there is one update that stands out because it is so wildly different: Regenerative food buffet. What?!

After Captain McCrea gets his morning status updates from the ship’s computer, he is alarmed to discover it’s already 12:30. He missed giving the morning announcements, which is the highlight of his otherwise boring day. It’s a problem McCrea solves for himself as he turns a time dial back three hours, to 9:30. This causes the artificial sun to change position in the sky and the lunch slurries to swap out for breakfast slushies. Those are the only material changes. Otherwise, life continues on as it was, as though time itself doesn’t actually matter.

Looking at costs this way tells me that Warp is operating at a huge loss. I live in Amsterdam so my monthly energy bill would be around 2k, yet I pay Warp no more than 90 euros.

Which brings me to the following: isn’t it cheaper to just hire a programmer from a low-wage country?

Not cheaper for me, mind you, I see the nominal difference. Cheaper for the environment maybe?

Is it?

Hiring a full time skilled programmer in, say, India or Latin America, would cost an average of 2,000 euros a month. Remote, of course. But similar in that I would have a developer on a screen.

So yes, for the environment, it would be cheaper. Lots. The average cost of home energy is around 50 euros in those countries, and multiple people live in a home.

As pointed out before, AI is an energy guzzler. The way it’s done now is tremendously inefficient: generating a single query response could cost as much energy as lighting a room for an hour.

But it’s early days yet. Early high pressure steam engines were also very inefficient, and prone to blowing up and wounding, maiming and killing who knows how many operators. James Watt’s designs were much safer, using low pressure to achieve better results.

So here’s to hoping that sometime soon we’ll invent a better way. Until then, it’s good to know that using a coding agent is costing someone, but probably not you, as much as hiring a human.

To understand Neopaquismo, you first have to understand a little about Paquismo.

Paquismo is basically the interior design of the “Paco” flats of the Franco era and the Transition (approx. 1965-1985), the homes of Boomers and early Generation X.

It is characterized by gotelé (stippled wall paint, used to hide the construction defects of cheap housing), durable solid-wood furniture, family photos (first communions, baptisms, the wedding) and displays of crockery kept for visitors.

The layout of the Paco flat is corridor-based: a separate kitchen, bedrooms opening off the corridor, and a living room that is the central part of the home.

But anyway, I'm not here today to talk about Paquismo, about which there is plenty to say and which I could talk about for hours (I love it).

Today I'm here to talk about NEOPAQUISMO.

Neopaquismo is the dominant style of interior design in the homes of the late-millennial and proto-zoomer generation (born approx. 1988-2000). It is defined mainly by being a head-on negation of Paquismo.

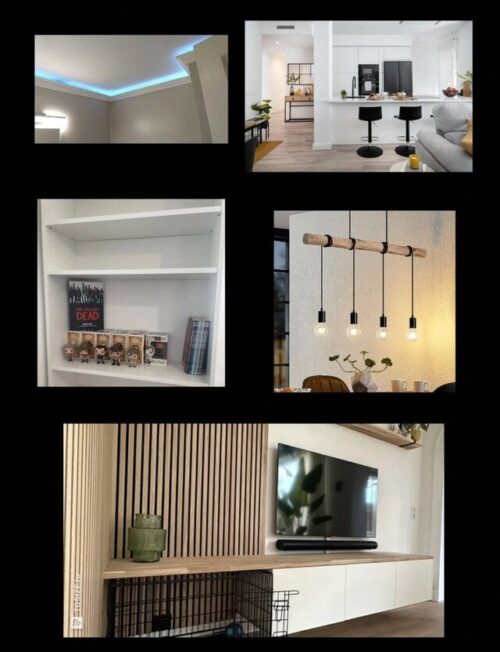

FIRST: IKEA FURNITURE

The omnipresence of Ikea is the cornerstone of Neopaquismo. In contrast to the heavy wooden furniture of Paquismo, Neopaquismo embraces chipboard. Cheap, modular, flat-pack furniture.

BILLY, KALLAX, MALM, BESTÅ: these are the icons of a generation that can afford neither solid-wood furniture nor the certainty of living in the same flat two years from now.

SECOND: THE REMOVAL OF GOTELÉ, AND LAMINATE FLOORS

Gotelé, the quintessential symbol of Paquismo, is Neopaquismo's public enemy number one.

The first thing any millennial does on moving in is strip it off, sand the walls and paint them spotless white.

But gotelé wasn't just tacky aesthetics; it was functional. Its job was to hide the building defects of the cheap housing of the Franco era and the kids' mischief. With the gotelé gone, every scuff now leaves a mark. These are walls that tolerate neither fingerprints nor scribbles.

THIRD: AN ALTAR TO POP CULTURE AND TRAVEL

Neopaquismo does away with the family photos in silver frames on the living-room sideboard (wedding, baptisms, first communions) and erects a new altar in their place:

The IKEA display cabinet lit by LED strips controlled by a remote or Alexa.

Paintings disappear from the walls and are replaced by prints from Amazon, posters of concerts or TV series and, above all, travel souvenirs:

scratch-off world maps, the knife you bought in Japan, the little wooden elephant from your trip to Thailand.

It reflects the fact that status is no longer measured by the possession of goods, nor by your family and its achievements (e.g., the trophies from the children's tournaments in Paquismo).

It is now measured by the accumulation of experiences: having been to, having seen, in general, having consumed.

Here lies much of the key to Neopaquismo:

For the millennial, family no longer occupies the center of life. The home is not a place to raise your children (who probably don't exist), but a stage on which to perform that identity built through “experiences”.

In this way, the home becomes a sort of Instagram backdrop to inhabit temporarily, before moving on to the next rented flat.

FOURTH: THE AUDIOVISUAL SPACE AS NERVE CENTER

If in Paquismo the living room was the space for family gatherings, in Neopaquismo the living room is completely reorganized around audiovisual consumption, alone or as a couple.

The television is no longer just another piece of furniture: it is THE piece of furniture. At least 55 inches, mounted on the wall or on a low Ikea unit (BESTÅ, always BESTÅ), surrounded by devices: the PlayStation, the Switch, the Apple TV, the soundbar, the router with its little blinking lights.

The sofa no longer faces the table or invites conversation. It faces the screen directly, in an almost religious arrangement. It is the secular altar of Neopaquismo: Netflix, HBO, Disney+, Prime Video.

While Paquismo organized space for family and visitors, Neopaquismo is organized around audiovisual consumption. There are no extra chairs, no room for more than three people. Because there are hardly any visitors, and if they do come, out comes the pouf.

FIFTH: THE OPEN-PLAN KITCHEN AND THE ILLUSION OF SPACE

Paquismo separated the kitchen from the rest of the home.

A functional, closed-off space. Real cooking happened there: stews, fried food, smells that were not supposed to invade the living room. The kitchen was the mother's territory, a place of work.

Neopaquismo KNOCKS DOWN that wall. The open-plan kitchen is the ultimate symbol of modernity: open space, visual integration, a feeling of spaciousness.

A bar with stools separates (or doesn't) the kitchen from the living room. The point is to escape the closed-in, corridor-bound atmosphere of Paquismo.

But here's the catch: the open-plan kitchen works because nobody really cooks anymore. Food is reheated, assembled, ordered in. Meal prep on Sundays, tupperware in the fridge, Glovo when you can't be bothered. Nothing that makes smoke, nothing that stains, nothing that smells too much.

An open space demands an open life: clean and tidy. No children or family meals to make a mess; in general, no domestic life.

And when the children finally arrive (if they arrive at all), the open-plan kitchen turns into a nightmare: it is impossible to contain the chaos, the mess and the smells that seep into the foam of the sofa. The spotless aesthetic promised by the architect's render is destroyed.

SIXTH: THE HOME OFFICE AND THE ABSENCE OF CHILDREN'S SPACES

Paco flats presupposed the existence of children; their bedrooms were planned in. In the Neo Paco home this space is given a new meaning and turned into home offices (the normalization of remote work), “gaming rooms” or simply high-turnover storage rooms.

IKEA standing desk, ergonomic/gaming chair, one or two external monitors.

The fact is that “work from home” is only viable in a home without children, without noise and without chaos.

Neopaquismo makes remote work possible precisely because it has eliminated domestic life from the home.

I could go on. Neopaquismo has more layers than fit in a thread: the plastic plants (because you don't know your neighbor well enough to ask them to water real ones when you go away for three weeks), the Lego sets displayed as if they were art…

The air fryer as fetish appliance (quick cooking, for one or two, no mess, no smells), the neon lights with motivational phrases (“Good vibes only”, “But coffee first”)… I could go on, but I think the point is clear.

Neopaquismo is not just a free aesthetic choice.

It is the inevitable consequence of the material conditions of our time: high rents, temporary contracts, forced labor mobility, childbearing postponed or cancelled outright, and social atomization.

Where Paquismo was ugly but honest, a working-class aesthetic that aspired to stability, Neopaquismo is pretty but empty.

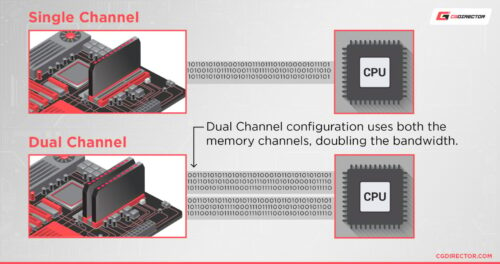

Dual-Channel RAM needs two matching slots on the motherboard to function properly.

But even when you’re activating Dual-Channel mode, you’re not actually doubling the speed of either of your RAM sticks. You are instead doubling their effective data rate.
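The arithmetic behind that distinction can be sketched in a few lines of Python. The sketch assumes a 64-bit (8-byte) channel and uses DDR4-3200 purely as an example figure; neither number comes from the quoted text, and the result is a theoretical peak, not what a real workload will see.

```python
BYTES_PER_TRANSFER = 8  # assumption: one 64-bit memory channel moves 8 bytes per transfer


def peak_bandwidth_gb_s(mega_transfers_per_s, channels=1):
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes).

    The per-stick transfer rate is untouched by the channel count;
    a second channel only lets two transfers happen side by side.
    """
    if channels < 1:
        raise ValueError("channels must be at least 1")
    return mega_transfers_per_s * BYTES_PER_TRANSFER * channels / 1000


if __name__ == "__main__":
    single = peak_bandwidth_gb_s(3200, channels=1)
    dual = peak_bandwidth_gb_s(3200, channels=2)
    print(f"single channel: {single} GB/s, dual channel: {dual} GB/s")
```

Each stick still runs at the same 3200 MT/s in both cases; only the combined figure doubles.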

The Galician is well aware that data consumption, which in the real world absorbs alarming amounts of electricity and drinking water, keeps growing, encouraged by the big tech companies. That with it comes a multiplying number of data centers, which store the files from our phones and make up “the cloud”. And that artificial intelligence has accelerated this process and forced several multinationals to backtrack on their environmental commitments for the coming years.

Unlike other activists, however, Otero refuses to shift the responsibility onto the user. As an architect, she prefers to redesign. Digital space can be rethought, she says, for the benefit of humans and the planet and not only of big companies; it can be made a finite, bounded place. Through teaching and an ever-greater personal involvement, she has witnessed the odd important win in Latin America. Now she keeps her sights set on what is, for her, the great victory of tech marketing: the idea that the internet is an ethereal, untouchable cloud because it has no shape or borders.

Q. In other words, putting limits on the internet.

A. There are already measures along these lines. From the right to digital disconnection at work to asking that pupils have no access to their phones in class. We are realizing that overuse of digital space brings mental-health, ecological and social problems. Why does everything have to be so based on speed, high resolution, 24-hour access, regardless of what is happening on the planet?

Q. How do you define an addictive relationship with data?

A. If you look on your phone at the last 20 photos you have saved, those images will say a lot about your relationship with data. They probably won't be photos you wanted to take; they'll be practically crutches against forgetting, like a screenshot of something you're not going to use but keep just in case. All that information, unless you delete it, is generally tied to the cloud, which means it is keeping data centers running and has a fairly long life. If on top of that you've sent it to your contacts, it is replicated in mirror data centers. All that junk information is consuming water and energy.

Q. How do you think the system is designed?

A. I've had several conversations about this, for example with a research director at Google. I said to her: “When we receive e-mails, why don't most of them disappear after five days, after 10 days, unless we tag them as important?” And that person laughed. “I think that's a lovely idea, but you have to understand that what interests us is accumulating information. We would rather invest in compressing that data than in having less of it.” That gives you an idea of why they keep offering us more storage space. I understand people. We keep e-mails just in case. But in my experience, when I've lost access to an e-mail account because I changed jobs, or lost a hard drive, I haven't needed it again.

In Chile, I worked in the community of Cerrillo, which had managed to stop Google in its tracks. They showed that the [200-million-dollar] data center the company was going to install in their community would use practically all the drinking water in the local aquifer. Google had to back down.

docker manages single containers

docker-compose manages multi-container applications
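One way to picture the difference, sketched in Python: with plain docker each container is started by its own `docker run` command, whereas docker-compose reads one declaration of all the services and starts them together. The `APP` dictionary below is a hypothetical stand-in for a compose file, and the service names and images are illustrative choices only.

```python
import shlex


def docker_run_command(name, image, ports=()):
    """Build the argv that starts ONE container with `docker run`."""
    argv = ["docker", "run", "-d", "--name", name]
    for host_port, container_port in ports:
        argv += ["-p", f"{host_port}:{container_port}"]
    argv.append(image)
    return argv


# Hypothetical two-service application, declared in one place the way a
# compose file would declare it.
APP = {
    "web": {"image": "nginx:alpine", "ports": [(8080, 80)]},
    "cache": {"image": "redis:7", "ports": []},
}


def commands_for_app(services):
    """One `docker run` per service: what you would otherwise type by hand."""
    return [
        docker_run_command(name, spec["image"], spec["ports"])
        for name, spec in services.items()
    ]


if __name__ == "__main__":
    print("# plain docker: one command per container")
    for argv in commands_for_app(APP):
        print(shlex.join(argv))
    print("# docker-compose: one command for the whole application")
    print("docker-compose up -d")
```

The commands are only printed, never executed, so the sketch runs without Docker installed.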

A proposal to standardise on using an /llms.txt file to provide information to help LLMs use a website at inference time.

Large language models increasingly rely on website information, but face a critical limitation: context windows are too small to handle most websites in their entirety. Converting complex HTML pages with navigation, ads, and JavaScript into LLM-friendly plain text is both difficult and imprecise.

While websites serve both human readers and LLMs, the latter benefit from more concise, expert-level information gathered in a single, accessible location. This is particularly important for use cases like development environments, where LLMs need quick access to programming documentation and APIs.

We propose adding a /llms.txt markdown file to websites to provide LLM-friendly content. This file offers brief background information, guidance, and links to detailed markdown files.
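To make the shape of such a file concrete, here is a small Python sketch that renders one. The layout it produces (an H1 title, a blockquote summary, then H2 sections listing markdown links with optional notes) reflects one reading of the proposal, and the project name and example.com URL in the usage are placeholders, not real resources.

```python
def render_llms_txt(title, summary, sections):
    """Render an /llms.txt-style markdown document.

    sections maps a heading to a list of (name, url, note) tuples;
    note may be an empty string.
    """
    lines = [f"# {title}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for name, url, note in links:
            entry = f"- [{name}]({url})"
            if note:
                entry += f": {note}"
            lines.append(entry)
        lines.append("")
    # Collapse trailing blank lines into a single final newline.
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(render_llms_txt(
        "Example Project",
        "A small library.",
        {"Docs": [("Quick start", "https://example.com/quickstart.md",
                   "install and first steps")]},
    ))
```

The result is plain markdown, so it stays readable to people while being cheap for a model to fit into its context window.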

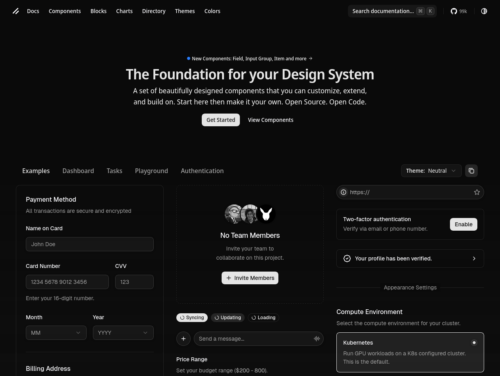

An open source component library optimized for fast development, easy maintenance, and accessibility. Just import and go—no configuration required.

A set of beautifully designed components that you can customize, extend, and build on. Start here then make it your own. Open Source. Open Code.

Object-Relational Mapping (ORM) is a technique that lets you query and manipulate data from a database using an object-oriented paradigm. When talking about ORM, most people are referring to a library that implements the Object-Relational Mapping technique, hence the phrase “an ORM”.

An ORM library is a completely ordinary library written in your language of choice that encapsulates the code needed to manipulate the data, so you don’t use SQL anymore; you interact directly with an object in the same language you’re using.
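A toy version of the idea, using only Python's standard library, might look like the sketch below. The `User` class and its `save`/`get` methods are invented for this illustration and are far simpler than any real ORM library; the point is only that the calling code works with objects while the SQL stays inside the class.

```python
import sqlite3
from dataclasses import dataclass
from typing import Optional


@dataclass
class User:
    name: str
    email: str
    id: Optional[int] = None

    @staticmethod
    def create_table(conn):
        conn.execute(
            "CREATE TABLE IF NOT EXISTS users "
            "(id INTEGER PRIMARY KEY, name TEXT, email TEXT)"
        )

    def save(self, conn):
        """Insert the object as a new row, or update its existing row."""
        if self.id is None:
            cur = conn.execute(
                "INSERT INTO users (name, email) VALUES (?, ?)",
                (self.name, self.email),
            )
            self.id = cur.lastrowid
        else:
            conn.execute(
                "UPDATE users SET name = ?, email = ? WHERE id = ?",
                (self.name, self.email, self.id),
            )
        return self

    @classmethod
    def get(cls, conn, user_id):
        """Load one row by primary key and map it back to an object."""
        row = conn.execute(
            "SELECT name, email, id FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return cls(*row) if row else None


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    User.create_table(conn)
    ada = User("Ada", "ada@example.com").save(conn)
    ada.email = "ada@example.org"
    ada.save(conn)
    print(User.get(conn, ada.id))
```

Note that the usage at the bottom contains no SQL at all: it creates, changes and reloads a `User` purely through the object.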

We have long felt the urgent need for websites that guarantee our safety, that cannot be censored, that make our struggles visible in a coherent way, and whose content can be self-managed.

That's why we built Sutty!

Sutty takes the website techniques that are cutting-edge among the tech elites and brings them closer to our political goals, making them accessible so that our collectives can express their voices.

Sutty is designed to strengthen the presence, security and freedom of expression of activist organizations and collectives.

We are a diverse and inclusive Latin American cooperative, and we build technology for human, LGBTTIQANB+ and environmental rights from a perspective of technical appropriation, technological autonomy and digital care.

Jérôme Fourquet, political scientist, director of the Opinion department at IFOP, co-author of the note “Tourisme 2.0 : anatomie de la France Airbnb” for the Institut Terram. Jessica Gourdon, journalist at Le Monde, co-author of the six-part investigation “L’ogre AirBnB”.

The New York Times reports that Trump posted 19 fake images and videos during his 2024 presidential campaign, from the outright absurd to the more plausible—such as a woman wearing a “Swifties for Trump” T-shirt. Since his election he’s posted 28 AI images and videos, including self-portraits as a king and as the pope. For anyone worried that AI-generated imagery might become a routine part of political influence operations, that horse has left the stable.

A recent study from fact-checking site NewsGuard found chatbots responding to queries about the news with falsehoods 35 per cent of the time—roughly double the rate of a year prior.

NewsGuard explains that the rise comes from AIs being programmed to answer questions even when they are uncertain whether an answer is correct. A year ago, AI bots would refuse to answer questions about the news 31 per cent of the time, while during this more recent round of tests the bots never refused to answer.

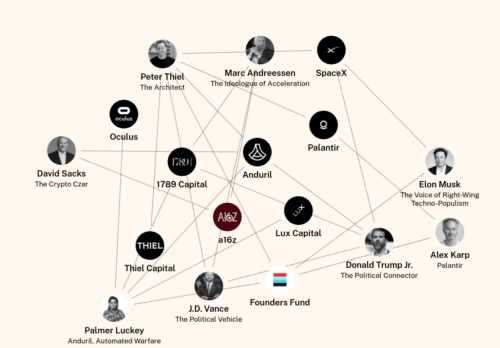

How Tech Billionaires Are Building a Post-Democratic America — And Why Europe Is Next

Under the banner of “patriotic tech”, this new bloc is building the infrastructure of control: clouds, AI, finance, drones and satellites, an integrated system we call the Authoritarian Stack. It is faster, ideological, and fully privatized: a regime where corporate boards, not public law, set the rules.

…laptops are command interfaces. Phones are consumption portals. The distinction matters more than anyone admits.

Neither is inherently wrong. But when 80% of your computing time happens in consumption mode, something shifts in how you relate to digital systems. You stop seeing them as malleable, hackable, controllable. You start seeing them as environmental conditions—like weather patterns you adapt to rather than infrastructures you can reshape.

The generational split isn’t about capability. It’s about default stance. Generation Z sees phones as primary computers because phones are functionally complete for consumption-primary workflows. But consumption-primary means command-secondary. And command-secondary means power-secondary.

Real creation—the kind that shifts power dynamics—involves building new systems, not just feeding existing ones. Writing code that others will use. Designing tools that change workflows. Publishing research that alters understanding. Creating infrastructure, not just content.

…the actual writing, structuring, editing? That’s laptop work. The friction—managing files, handling git, processing images, structuring arguments across multiple editing sessions—that friction is generative. It forces deeper thinking. It enables system-level creation.

Both stances serve different purposes. The trap is unconscious default to one stance across all contexts. Because the stance becomes self-reinforcing. Consumption mode atrophies creation muscles. Creation mode can miss the forest for the trees of constant optimization.

The wisdom is flexibility—consciously choosing creation or consumption mode based on context, rather than being chosen by interface design decisions made by platform architects optimizing for their goals, not yours.

Since arriving in France in 2012, Airbnb has established itself as a central player in tourist accommodation. In a decade, the platform, along with others such as Booking and Abritel, has profoundly reshaped travel habits. The study traces this rapid expansion: from a scattered presence in big cities, ski resorts and coastal resorts in 2013, it had spread by 2019 across almost the entire country, following the appeal of major heritage sites, nature parks and coastlines, and then accelerated sharply after Covid. Festivals, cultural events and sporting events create booking peaks, revealing how the platforms have become part of the local events economy. The analysis also highlights the tensions: the role of second homes, competition or complementarity with hotels, effects on housing, but also the survival of local shops. On the user side, one French person in two has already booked through Airbnb; users are younger, more educated and more urban than average, but reflect society as a whole. Stays are short (5 days on average), often as a couple or family, and driven by budget trade-offs. While the platforms offer flexibility and variety, they do not erase all inequalities in access to holidays: working-class households, young women and rural residents remain the most likely to be shut out.

In small towns showing no Airbnb bookings at all, in other words the least touristy ones, the average number of local shops and services recorded reaches only 3.5. As soon as a minimal flow of visitors shows up on the platform, this indicator rises markedly to more than 5 basic shops and services on average. The trend holds as tourism intensifies: in municipalities where the volume of overnight stays exceeded 10,000 in 2024, the average climbs to 7 essential shops or services. In the most attractive localities, where overnight stays exceed 20,000 a year, it even crosses the symbolic threshold of 10.

changeme picks up where commercial scanners leave off. It focuses on detecting default and backdoor credentials and not necessarily common credentials. Its default mode is to scan for HTTP default credentials, but it also supports other credential types.

changeme is designed to make adding new credentials simple, without having to write any code or modules. changeme keeps credential data separate from code. All credentials are stored in yaml files so they can be both easily read by humans and processed by changeme. Credential files can be created by using the ./changeme.py --mkcred tool and answering a few questions.

The medialabmadrid program ran from 2002 to 2006 under the direction of Karin Ohlenschläger and Luis Rico. It was conceived as a laboratory open to dialogue between the most diverse artistic, scientific and technological practices. The program's modular, open structure is organized around five interconnected areas of activity: Art, Science, Technology, Society and Sustainability (ACTS+S).

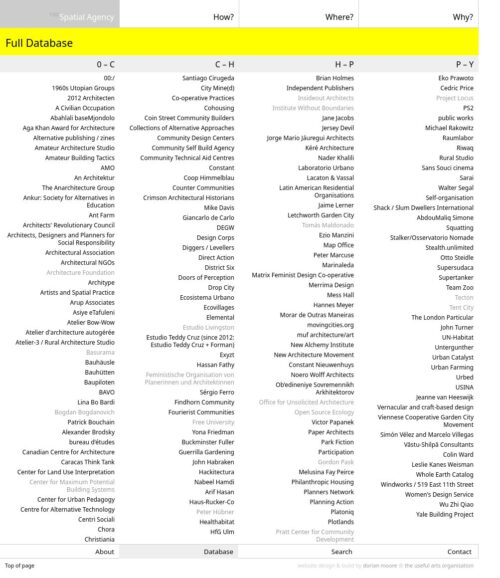

Spatial Agency is a project that presents a new way of looking at how buildings and space can be produced. Moving away from architecture’s traditional focus on the look and making of buildings, Spatial Agency proposes a much more expansive field of opportunities in which architects and non-architects can operate. It suggests other ways of doing architecture.

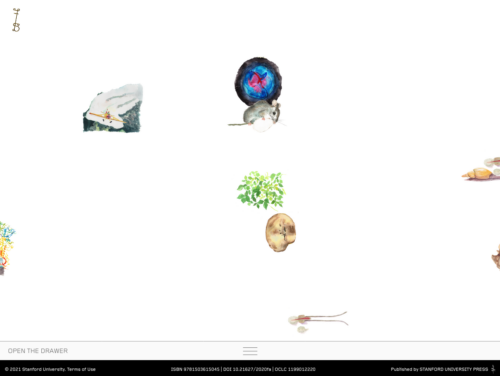

Feral Atlas invites you to navigate the land-, sea-, and airscapes of the Anthropocene. We trust that as you move through the site—pausing to look, read, watch, reflect, and perhaps occasionally scratch your head—you will slowly find your bearings, both in relation to the site’s structure and the foundational concerns and concepts to which it gives form. Feral Atlas has been designed to reward exploration. Following seemingly unlikely connections and thinking with a variety of media forms can help you to grasp key underlying ideas, ideas that are specifically elaborated in the written texts to be found in the “drawers” located at the bottom of every page.

The main repositories now contain PHP 5.6, PHP 7.0-7.4 and PHP 8.0-8.3, all coinstallable together.

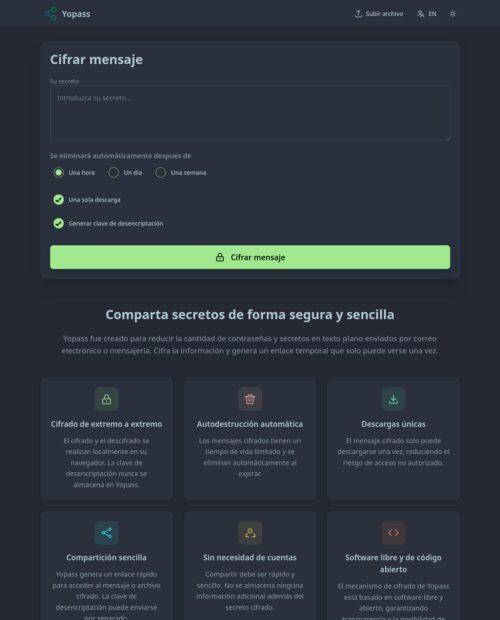

Yopass was created to reduce the number of passwords and secrets sent in plain text by e-mail or messaging. It encrypts the information and generates a temporary link that can only be viewed once.
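The "temporary, view-once" behavior can be sketched in Python as follows. This is not how Yopass itself is implemented: the `OneTimeStore` class is hypothetical, it keeps everything in memory, and it deliberately leaves out the encryption step, covering only expiry and single retrieval.

```python
import secrets
import time


class OneTimeStore:
    """In-memory store where each secret can be read once, before it expires."""

    def __init__(self, clock=time.monotonic):
        self._items = {}
        self._clock = clock  # injectable so expiry can be tested without sleeping

    def put(self, secret, ttl_seconds):
        """Store a secret and return an unguessable token to share as a link."""
        token = secrets.token_urlsafe(16)
        self._items[token] = (secret, self._clock() + ttl_seconds)
        return token

    def take(self, token):
        """Return the secret and forget it; None if unknown, used or expired."""
        item = self._items.pop(token, None)  # removed on the first read attempt
        if item is None:
            return None
        secret, expires_at = item
        if self._clock() >= expires_at:
            return None
        return secret


if __name__ == "__main__":
    store = OneTimeStore()
    token = store.put("correct horse battery staple", ttl_seconds=3600)
    print(store.take(token))  # first read returns the secret
    print(store.take(token))  # second read returns None
```

A real service would also encrypt the secret before storing it, so that the server never holds the plain text.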

Naturopathy, magnetism, energy psychology… Since the health crisis, “alternative medicines” have been proliferating. A dangerous phenomenon that too often leads to therapeutic, even cult-like, abuses. Margot Brunet, a journalist specializing in science and health, investigated these charlatans in her book “Naturopathie. L’imposture scientifique” (published by Les Échappés, out on 9 October).

That's the whole problem with this nebulous field: knowing what to call them. If we talk about “alternative medicines”, we give them a scientific veneer, since we bring them into a medical framework. We could say “unconventional health practices”, but admittedly that's a bit long and, above all, this is more about well-being than health. As for what to include, that's complicated too: you can't really draw up a list of practices, since anyone can create their own specialty and call it whatever they like. In the book, I therefore decided to define them as all unregulated and scientifically unproven practices that claim to have effects on health.

For many, turning to pseudo-therapies is an act of protest, a stand against the current political and health system. People who consult this kind of practitioner don't go particularly because they are disappointed with the health system; they go because they believe in another system of thought.

All the more so as some practices are now reimbursed by mutual health insurers, which is a virtuous circle for this market: people pay more for their insurance to have access to these alternative medicines, and pseudo-therapists get more clients… who come all the more willingly because the consultations are reimbursed by the insurers.