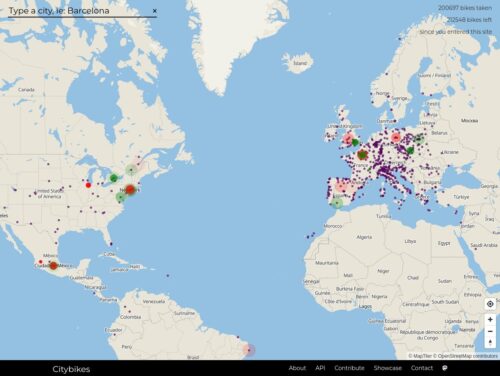

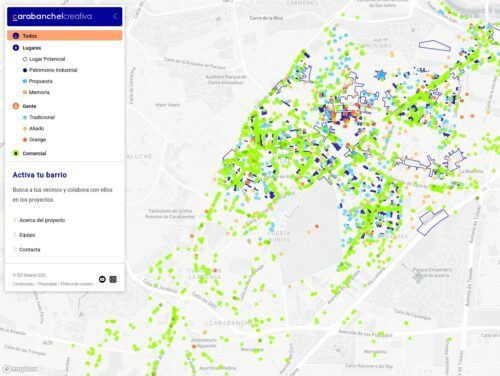

Mapping Millions: How to Cluster Thousands of Markers with Next.js, Leaflet, and Supercluster

The easier approach is to cluster the markers on the client side, thanks to Leaflet plugins like Leaflet.markercluster.

…client-side clustering has its limitations. To make it work, you need to load all the markers at once! This is not a problem if you have a small number of markers, but with thousands or millions of markers, it can quickly become a performance bottleneck.

Backend clustering addresses this problem by processing the data on the server. Only clusters for the current viewport and zoom level are sent to the client. This minimizes bandwidth usage and reduces client-side processing. In my solution, I used Supercluster, a high-performance clustering library that works well with Leaflet maps.
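To make the "viewport and zoom level" idea concrete, here is a minimal sketch of what a server could do for each request. It is deliberately a naive grid-based stand-in, not Supercluster's algorithm and not the code from my solution: the names `Point`, `BBox`, `Cluster`, and `clusterForViewport`, as well as the cell-size formula, are assumptions made up for illustration. It also ignores viewports that cross the antimeridian.

```typescript
// Naive grid clustering: a simplified stand-in, NOT Supercluster's algorithm.
// All type and function names below are hypothetical.

type Point = { id: string; lng: number; lat: number };
// Bounding box as [west, south, east, north] in degrees.
type BBox = [number, number, number, number];
type Cluster = { lng: number; lat: number; count: number };

function clusterForViewport(points: Point[], bbox: BBox, zoom: number): Cluster[] {
  const west = bbox[0];
  const south = bbox[1];
  const east = bbox[2];
  const north = bbox[3];

  // Assumed heuristic: the grid cell (in degrees) halves with every zoom level,
  // so clusters break apart as the user zooms in.
  const cellSize = 360 / Math.pow(2, zoom + 2);

  const cells: Record<string, { sumLng: number; sumLat: number; count: number }> = {};

  for (const p of points) {
    // Drop everything outside the current viewport.
    if (p.lng < west || p.lng > east || p.lat < south || p.lat > north) continue;

    // Points falling into the same grid cell are merged into one cluster.
    const key = `${Math.floor(p.lng / cellSize)}:${Math.floor(p.lat / cellSize)}`;
    const cell = cells[key] || { sumLng: 0, sumLat: 0, count: 0 };
    cell.sumLng += p.lng;
    cell.sumLat += p.lat;
    cell.count += 1;
    cells[key] = cell;
  }

  // Each cell becomes one cluster, placed at the mean position of its points.
  return Object.keys(cells).map((key) => {
    const c = cells[key];
    return { lng: c.sumLng / c.count, lat: c.sumLat / c.count, count: c.count };
  });
}
```

The client would send its current bounds and zoom with each request and receive only the resulting clusters, so the payload stays small no matter how many markers live on the server. In a real setup, a spatial index built once at startup (which is what Supercluster provides) replaces the linear scan over all points.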