The Galician architect is acutely aware that data consumption keeps growing, encouraged by the big tech companies, and that in the physical world it soaks up alarming amounts of electricity and drinking water. She knows that it multiplies the construction of the data centers that store the files on our phones and make up “the cloud.” She also knows that artificial intelligence has accelerated this process and forced several multinationals to backtrack on their environmental commitments for the coming years.

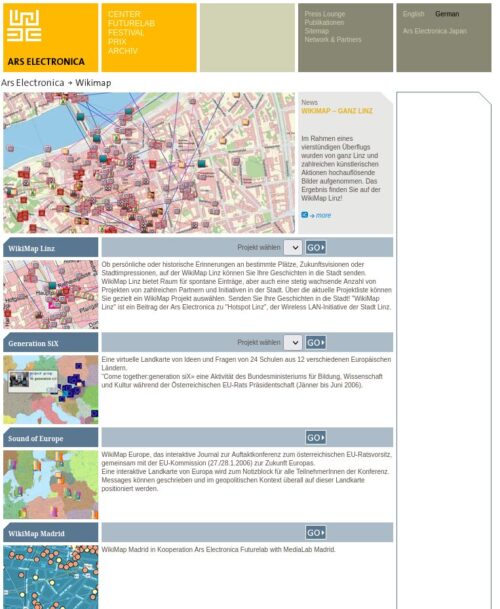

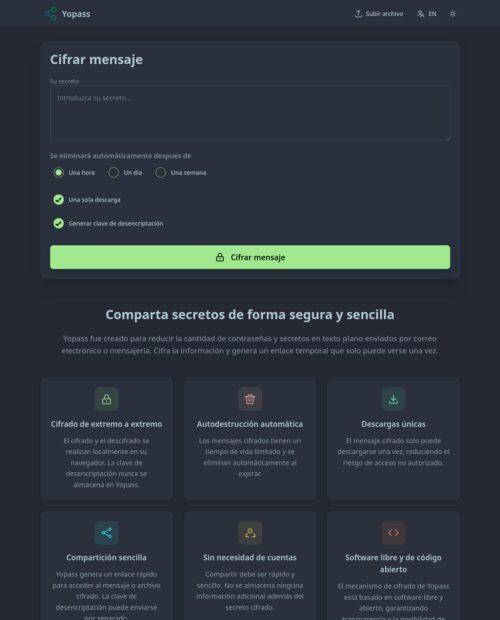

However, unlike other activists, Otero refuses to shift the responsibility onto the user. As an architect, she prefers to redesign. Digital space can be rethought, she says, to benefit people and the planet and not just big companies, and it can be made a finite, bounded place. Through her teaching and an ever-deeper personal involvement, she has witnessed some significant victories in Latin America. Now she has her sights set on what she considers tech marketing's great triumph: the idea that the internet is an ethereal, untouchable cloud because it has no shape and no borders.

Q. In other words, putting limits on the internet.

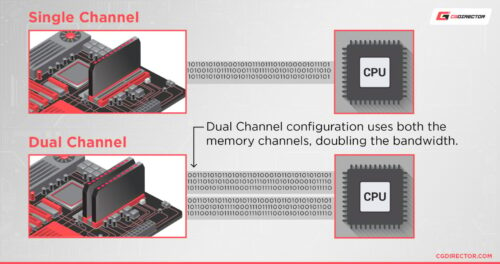

A. There are already measures along these lines, from the right to digital disconnection at work to asking that students not have access to their phones in class. We are realizing that overuse of digital space brings mental health, ecological and social problems. Why does everything have to be so geared toward speed, high resolution and round-the-clock access, regardless of what is happening to the planet?

Q. How would you define an addictive relationship with data?

A. If you look through the last 20 photos you have saved on your phone, those images will say a lot about your relationship with data. They probably won't be photos you actually wanted to take. They will be crutches to keep you from forgetting, like a screenshot of something you will never use but keep just in case. Unless you delete it, all that information is generally tied to the cloud, which means it keeps data centers running and has a fairly long life. If you have also sent it to your contacts, it is replicated in mirror data centers. All that junk information is consuming water and energy.

Q. How do you think the system is designed?

A. I've had several conversations about this, for example with a research director at Google. I asked her: “When we receive emails, why don't most of them disappear after five or 10 days unless we tag them as important?” She laughed. “I think that's a lovely idea, but you have to understand that what interests us is accumulating information. We would rather invest in compressing that data than in having less of it.” That gives you an idea of why we keep being offered more and more storage space. I understand people. We keep emails just in case. But in my experience, when I have lost access to an email account because I changed jobs, or when I have lost a hard drive, I have never needed that data again.

In Chile, I worked with the community of Cerrillos, which had managed to stop Google in its tracks. Residents showed that the [$200 million] data center the company planned to build in their community would use practically all the drinking water in the local aquifer. Google had to back down.